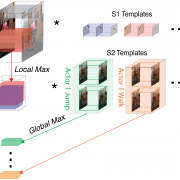

The human brain can rapidly parse a constant stream of visual input. Most visual neuroscience studies, however, focus on responses to static, still-frame images. Here we use magnetoencephalography (MEG) decoding and a computational model to study invariant action recognition in videos. We created a well-controlled, naturalistic dataset to study action recognition across different views and actors. We find that, like objects, actions can be read out from MEG data in under 200 ms (after the subject has viewed only 5 frames of video). Actions can also be decoded across actor and viewpoint, showing that this early representation is invariant. Finally, to recognize actions, we developed an extension of the HMAX model, which is traditionally used to perform size- and position-invariant object recognition in images. The model is inspired by Hubel and Wiesel's findings of simple and complex cells in primary visual cortex and by a recent computational theory of feedforward invariant systems. We show that instantiations of this model class can also perform recognition in natural videos that is robust to non-affine transformations. Specifically, view-invariant action recognition and action-invariant actor identification can be achieved in the model by pooling across views or across actions, respectively, in the same manner and in the same model layer as pooling across affine transformations (size and position) in traditional HMAX. Together, these results provide a temporal map of the first few hundred milliseconds of human action recognition, as well as a mechanistic explanation of the computations underlying invariant visual recognition.
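The pooling idea above can be illustrated with a toy Python sketch. It is not the authors' model. It skips HMAX's image-filtering stages, represents each stimulus as a short feature vector, and uses cyclic shifts as a hypothetical stand-in for changes of viewpoint. The helper names (`shift`, `cosine`, `build_template_orbits`, `signature`) are illustrative only. Each "simple cell" responds to one stored view of one template. Each "complex cell" takes the max over all stored views of its template, so the same input seen from a different view produces the same pooled signature.

```python
import math
from typing import List, Sequence

Vector = List[float]


def shift(v: Sequence[float], s: int) -> Vector:
    """Cyclically shift v by s positions: a toy stand-in for a change of viewpoint."""
    n = len(v)
    return [v[(i - s) % n] for i in range(n)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Normalized dot product: a 'simple cell' tuned to one stored template view."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def build_template_orbits(templates: List[Vector]) -> List[List[Vector]]:
    """For each template, store all of its 'views' (here: every cyclic shift)."""
    n = len(templates[0])
    return [[shift(t, s) for s in range(n)] for t in templates]


def signature(x: Sequence[float], orbits: List[List[Vector]]) -> Vector:
    """One 'complex cell' per template: max over the simple-cell responses to its views."""
    return [max(cosine(x, view) for view in orbit) for orbit in orbits]


if __name__ == "__main__":
    templates = [[1.0, 0.0, 2.0, 0.0, 1.0], [0.0, 3.0, 1.0, 1.0, 0.0]]
    orbits = build_template_orbits(templates)

    x = [2.0, 1.0, 0.0, 0.0, 1.0]   # one toy "action" seen from one "view"
    x_other_view = shift(x, 2)      # the same toy "action" from another "view"

    # A single simple cell (no pooling) changes with the view...
    print(cosine(x, templates[0]), cosine(x_other_view, templates[0]))
    # ...but the pooled signatures match.
    print(signature(x, orbits))
    print(signature(x_other_view, orbits))
```

Because cyclic shifts form a group, shifting the input only permutes each template's stored views, so the max is exactly unchanged. The viewpoint changes in the study are non-affine and do not form such a group, so this exact argument does not carry over to them directly. Storing each template under different actions rather than different views, and pooling over those, is the analogous construction for action-invariant actor identification.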

Theoretical Frameworks for Intelligence

Understanding intelligence and the brain requires theories at different levels, including the biophysics of single neurons, algorithms and circuits, overall computations and behavior, and a theory of learning. Over the past few decades, advances have been made in many of these areas from multiple perspectives. In fact, several major contributors to these advances are members of our team.

This theoretical foundation provides a common framework for fields as diverse as computer science, cognitive science, and neuroscience. Recent successes in intelligent systems applications – from Google to Watson – would not have been possible without these developments. For the first time, we have the beginnings of a unifying and useful mathematics of brains, minds, and machines with rigorous foundations, demonstrated applicability in almost every area of cognitive and neural science, and real practical value for building intelligent systems.