Semester:

- Fall 2024

Course Level:

- Graduate

Understanding human intelligence and how to replicate it in machines is arguably one of the greatest problems in science. Learning, its principles and computational implementations, is at the very core of intelligence. During the last two decades, for the first time, artificial intelligence systems have been developed that begin to solve complex tasks that were until recently the exclusive domain of biological organisms, such as computer vision, speech recognition, or natural language understanding: cameras recognize faces, smartphones understand voice commands, smart chatbots/assistants answer questions, and cars can see and avoid obstacles. The machine learning algorithms at the root of these success stories are trained with examples rather than programmed to solve a task. This has been a once-in-a-lifetime paradigm shift for Computer Science: a move from a core emphasis on programming to training-from-examples. This course -- the oldest on ML at MIT -- has been pushing for this shift from its inception around 1990. However, a comprehensive theory of learning is still incomplete, as shown by the several puzzles of deep learning. An eventual theory of learning that explains why deep networks work and what their limitations are may thus enable the development of more powerful learning approaches and perhaps inform our understanding of human intelligence.

In this spirit, the course covers foundations and recent advances in statistical machine learning theory, with the dual goal a) of providing students with the theoretical knowledge and the intuitions needed to use effective machine learning solutions and b) of preparing more advanced students to contribute to progress in the field. This year the emphasis is again on b).

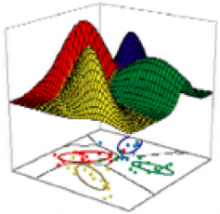

The course is organized around the core idea of a parametric approach to supervised learning, in which a powerful approximating class of parametric functions -- such as Deep Neural Networks -- is trained, that is, optimized on a training set. This approach to learning is, in a sense, the ultimate inverse problem, in which sparse compositionality and stability play a key role in ensuring good generalization performance.
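
As a rough illustration of what "trained, that is, optimized on a training set" can mean, the sketch below fits a two-parameter model by gradient descent on the mean squared error over a small synthetic training set. It is not taken from the course materials: the linear model, the squared loss, the step size, and the names `predict`, `empirical_risk`, and `train` are all assumptions chosen to keep the example short, with the linear model standing in for a richer parametric class such as a deep network.

```python
import random


def predict(params, x):
    """Parametric model f(x; w, b) = w * x + b (hypothetical stand-in for a richer class)."""
    w, b = params
    return w * x + b


def empirical_risk(params, data):
    """Mean squared error of the model over the training set."""
    return sum((predict(params, x) - y) ** 2 for x, y in data) / len(data)


def train(data, lr=0.1, steps=500):
    """Minimize the empirical risk with plain gradient descent (assumed optimizer)."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Synthetic training set: noisy samples of y = 3x - 1 on 21 points in [-1, 1].
rng = random.Random(0)
xs = [i / 10 for i in range(-10, 11)]
train_set = [(x, 3.0 * x - 1.0 + rng.gauss(0, 0.05)) for x in xs]

params = train(train_set)
print("learned parameters:", params)
print("training risk:", empirical_risk(params, train_set))
```

The learned parameters should land close to the generating values (slope 3, intercept -1). How well such a fit carries over to unseen data is the generalization question the paragraph above refers to, and this sketch does not address it.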