Invariance in visual cortex neurons as defined through deep generative networks

Leader: Carlos Ponce

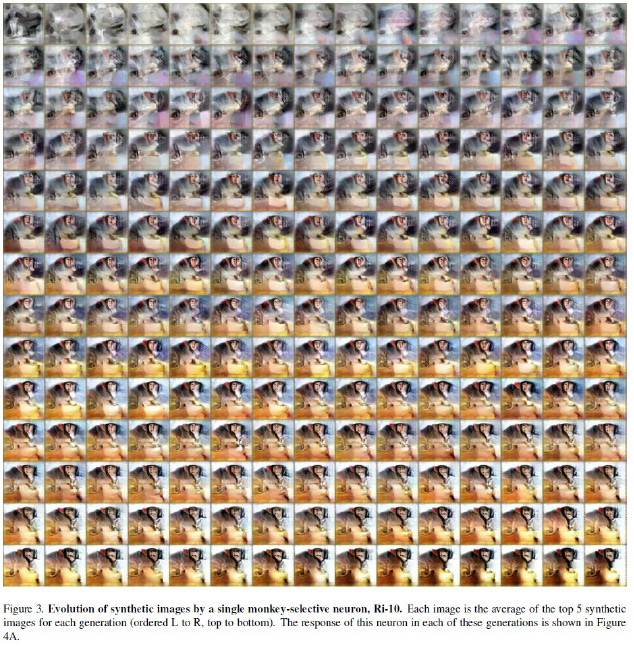

We study cells in the visual cortex of macaque monkeys, and our goal is to discover how invariant representations arise in object recognition areas (including V1, V2, V4 and inferotemporal cortex, or IT). Two specific questions must be answered to identify the cortical algorithms that create invariant representations: 1) what visual information an individual neuron or population represents, and 2) how the neuron learns to preserve this representation across nuisance changes such as size, translation or rotation. In collaboration with Dr. Gabriel Kreiman, Dr. Margaret Livingstone and other members of CBMM, we recently used generative adversarial networks (GANs) and genetic algorithms to identify shapes encoded by neurons in IT and V1, generating images that elicited firing rates exceeding those evoked by natural images. We were surprised to discover that most individual neurons and multiunit sites encoded complex scene fragments containing diagnostic shapes frequently present in monkey faces (e.g., convex borders surrounding small dark spots), bodies and places (e.g., rectilinear borders). In follow-up experiments, we presented the neurons with their own synthetic stimuli after changing the stimuli's size, position and rotation, and found that the neurons had limited invariance: their firing rates varied more across the transformed synthetic images than across transformed natural images. Our goal under the present grant is to test whether strongly invariant representations in neurons can be discovered using GANs.
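The closed-loop search pairing a GAN with a genetic algorithm can be illustrated as an evolutionary optimization over the generator's latent codes, where each code is scored by the neuron's response to the image it produces. This is a minimal sketch under loudly stated assumptions, not the method used in the experiments: `generator`, `neuron_response` and `evolve` are hypothetical names, the "generator" is a fixed random map rather than a trained GAN, the "neuron" is a deterministic toy model rather than a recorded cell, and the population size, selection, crossover and mutation settings are illustrative.

```python
import numpy as np

# Hypothetical stand-ins (assumptions, not the study's models): a fixed random
# "generator" mapping a latent code to a small image, and a toy "neuron" whose
# firing rate is a rectified match to a preferred pattern.
LATENT_DIM = 16
IMG_SIDE = 8

_rng_model = np.random.default_rng(0)
_W = _rng_model.normal(size=(IMG_SIDE * IMG_SIDE, LATENT_DIM)) / np.sqrt(LATENT_DIM)
_PREFERRED = _rng_model.normal(size=IMG_SIDE * IMG_SIDE)


def generator(z: np.ndarray) -> np.ndarray:
    """Map a latent code to a bounded 8x8 'image' (hypothetical GAN stand-in)."""
    return np.tanh(_W @ z).reshape(IMG_SIDE, IMG_SIDE)


def neuron_response(img: np.ndarray) -> float:
    """Toy firing rate: rectified projection of the image onto a preferred pattern."""
    return float(max(0.0, img.ravel() @ _PREFERRED))


def evolve(n_generations=50, pop_size=20, n_parents=5, mutation_sd=0.3, seed=1):
    """Genetic algorithm over latent codes, scored by the toy neuron.

    Returns the best latent code found and the best score in each generation.
    """
    rng = np.random.default_rng(seed)
    pop = rng.normal(size=(pop_size, LATENT_DIM))
    history = []
    for _ in range(n_generations):
        # Score every code by the neuron's response to its generated image.
        scores = np.array([neuron_response(generator(z)) for z in pop])
        history.append(float(scores.max()))
        # Keep the highest-scoring codes as parents.
        order = np.argsort(scores)[::-1]
        parents = pop[order[:n_parents]]
        # Elitism: carry the best code forward unchanged.
        children = [parents[0]]
        while len(children) < pop_size:
            a, b = parents[rng.choice(n_parents, size=2, replace=False)]
            mask = rng.random(LATENT_DIM) < 0.5  # uniform crossover
            child = np.where(mask, a, b) + rng.normal(scale=mutation_sd, size=LATENT_DIM)
            children.append(child)
        pop = np.array(children)
    scores = np.array([neuron_response(generator(z)) for z in pop])
    history.append(float(scores.max()))
    best = pop[int(np.argmax(scores))]
    return best, history
```

Calling `best, history = evolve()` returns the highest-scoring latent code and the per-generation best response; `generator(best)` is the corresponding synthetic image. Because the toy neuron is deterministic and the best code is always retained, `history` never decreases. Real recordings are noisy, so an actual experiment would need repeated presentations or noise-tolerant selection rather than relying on this guarantee.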