By Jeremy Hsu

Posted 15 Feb 2016

A recent IEEE Spectrum article covers Prof. Shimon Ullman's research on the digital baby project.

Excerpt:

"Can artificial intelligence evolve as a human baby does, learning about the world by seeing and interacting with its surroundings? That’s one of the questions driving a huge cognitive psychology experiment that has revealed crucial differences in how humans and computers see images.

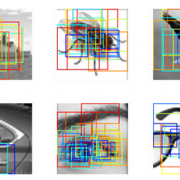

The study has tested the limits of human and computer vision by examining each one’s ability to recognize partial or fuzzy images of objects such as airplanes, eagles, horses, cars, and eyeglasses. Unsurprisingly, human brains proved far better than computers at recognizing these “minimal” images even as they became smaller and harder to identify. But the results also offer tantalizing clues about the quirks of human vision—clues that could improve computer vision algorithms and eventually lead to artificial intelligence that learns to understand the world the way a growing toddler does.

“The study shows that human recognition is both different and better performing compared with current models,” said Shimon Ullman, computer scientist at the Weizmann Institute of Science in Rehovot, Israel. “We think that this difference [explains the inability] of current models to analyze automatically complex scenes—for example, getting details about actions performed by people in the image, or understanding social interactions between people.”"

The article covers work published in a PNAS paper: Atoms of recognition in human and computer vision

by Shimon Ullman, Liav Assif, Ethan Fetaya, and Daniel Harari

Link to read this paper: http://www.pnas.org/content/early/2016/02/09/1513198113