Children learn to describe what they see through visual observation while overhearing incomplete linguistic descriptions of events and of the properties of objects. We have previously made progress on learning the meanings of some words from visual observation and are now extending this work in several ways. The prior work required that the descriptions be unambiguous, meaning that each has a single syntactic parse. We now aim to learn from ambiguous descriptions, learning the meanings of the words while simultaneously discovering the correct parse of each sentence. Ultimately, we aim for this work to acquire both a lexicon and a parser through visual observation.

Vision and Language

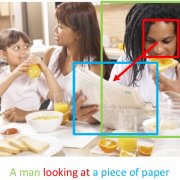

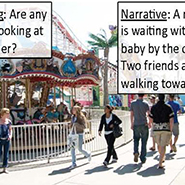

The goal of this research is to combine vision with aspects of language and social cognition to obtain complex knowledge about the environment. To obtain a full understanding of visual scenes, computational models should be able to extract from the scene any meaningful information that a human observer can extract about actions, agents, goals, scenes and object configurations, social interactions, and more. We refer to this as the ‘Turing test for vision,’ i.e., the ability of a machine to use vision to answer a large and flexible set of queries about objects and agents in an image in a human-like manner. Queries might concern objects and their parts, spatial relations between objects, actions, goals, and interactions. Understanding queries and formulating answers requires interactions between vision and natural language. Interpreting goals and interactions requires connections between vision and social cognition. Answering queries also requires task-dependent processing, i.e., different visual processes to achieve different goals.
