Semester:

- Fall 2021

Course Level:

- Graduate, Undergraduate

[6.804 counts as an AUS for Course 6 in the AI concentration. Graduate students (seeking H-level or AAGS credit) should take 9.660.]

An introduction to computational theories of human cognition, and the computational frameworks that could support human-like artificial intelligence (AI). Our central questions are: What is the form and content of people's knowledge of the world across different domains, and what are the principles that guide people in learning new knowledge and reasoning to reach decisions based on sparse, noisy data? We survey recent approaches to cognitive science and AI built on the following principles:

* World knowledge can be described using probabilistic generative models; perceiving, learning, reasoning and other cognitive processes can be understood as Bayesian inferences over these generative models.

* To capture the flexibility and productivity of human cognition, generative models can be defined over richly structured symbolic systems such as graphs, grammars, predicate logics, and, most generally, probabilistic programs.

* Inference in hierarchical models can explain how knowledge at multiple levels of abstraction is acquired.

* Learning with adaptive data structures allows models to grow in complexity or change form in response to the observed data.

* Approximate inference schemes based on sampling (Monte Carlo) and deep neural networks allow rich models to scale up efficiently, and may also explain some of the algorithmic and neural underpinnings of human thought.
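As a rough illustration of the first principle, here is a minimal Python sketch of Bayesian concept learning in a toy version of the number game: a learner sees a few example numbers between 1 and 100 and infers which concept generated them. The hypothesis space, the uniform prior, and all names (`HYPOTHESES`, `posterior`, `prob_in_concept`) are assumptions made for illustration, not course code. The likelihood assumes each example is sampled uniformly at random from the concept's extension (the "size principle"), so smaller consistent hypotheses are favored, one form of the Bayesian Occam's razor.

```python
from fractions import Fraction

DOMAIN = range(1, 101)

# Hypothetical toy hypothesis space; a realistic model would use many more concepts.
HYPOTHESES = {
    "even numbers": {n for n in DOMAIN if n % 2 == 0},
    "odd numbers": {n for n in DOMAIN if n % 2 == 1},
    "powers of two": {n for n in DOMAIN if (n & (n - 1)) == 0},
    "multiples of ten": {n for n in DOMAIN if n % 10 == 0},
    "all numbers 1-100": set(DOMAIN),
}


def likelihood(examples, extension):
    """Size principle: each example is assumed drawn uniformly from the concept."""
    if not all(x in extension for x in examples):
        return Fraction(0)
    return Fraction(1, len(extension)) ** len(examples)


def posterior(examples):
    """Bayes' rule with a uniform prior over HYPOTHESES."""
    prior = Fraction(1, len(HYPOTHESES))
    unnormalized = {h: prior * likelihood(examples, ext) for h, ext in HYPOTHESES.items()}
    total = sum(unnormalized.values())  # nonzero: "all numbers 1-100" fits any data in 1..100
    return {h: p / total for h, p in unnormalized.items()}


def prob_in_concept(y, examples):
    """Generalization: P(y is in the concept | examples), averaged over hypotheses."""
    post = posterior(examples)
    return sum(p for h, p in post.items() if y in HYPOTHESES[h])


if __name__ == "__main__":
    data = [16, 8, 2, 64]
    for h, p in sorted(posterior(data).items(), key=lambda kv: -kv[1]):
        print(f"{h:20s} {float(p):.4f}")
    print("P(32 in concept):", float(prob_in_concept(32, data)))
    print("P(20 in concept):", float(prob_in_concept(20, data)))
```

With the examples 16, 8, 2 and 64, three hypotheses remain consistent (even numbers, powers of two, all numbers), but powers of two receives nearly all of the posterior mass because its extension is the smallest. Exact `Fraction` arithmetic is used here only to keep the numbers easy to check; floats or log-probabilities would be the usual choice for larger hypothesis spaces.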

We will introduce a range of modeling tools, including core methods from contemporary AI and Bayesian machine learning, as well as new approaches based on probabilistic programming languages. We will show how these methods can be applied to many aspects of cognition, including perception, concept learning and categorization, language understanding and acquisition, common-sense reasoning, decision-making and planning, and theory of mind and social cognition. Lectures will focus on the intuitions behind these models and their applications to cognitive phenomena, rather than on detailed mathematics. Recitations will fill in mathematical background and give hands-on modeling guidance in several probabilistic programming environments, including Church, WebPPL and PyMC3.
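The core idea of a probabilistic program can be sketched without any of those environments. The following plain Python example (not Church, WebPPL or PyMC3 syntax) writes a generative model as an ordinary function that makes random choices, then conditions on an observation by rejection sampling, the simplest Monte Carlo inference scheme: run the program many times and keep only the runs whose output matches the data. The trick-coin scenario, its parameters, and the function names are hypothetical.

```python
import random

# Hypothetical model parameters (not taken from the course materials).
PRIOR_TRICK = 0.1    # prior probability that the coin is a trick coin
TRICK_WEIGHT = 0.9   # P(heads) for a trick coin; a fair coin has 0.5
NUM_FLIPS = 5


def coin_model(rng):
    """Generative program: pick a coin, then flip it NUM_FLIPS times."""
    trick = rng.random() < PRIOR_TRICK
    weight = TRICK_WEIGHT if trick else 0.5
    flips = [rng.random() < weight for _ in range(NUM_FLIPS)]  # True means heads
    return trick, flips


def rejection_sample(model, condition, num_samples, seed=0):
    """Run the program repeatedly, keeping the latent value only when condition(data) holds."""
    rng = random.Random(seed)
    accepted = []
    while len(accepted) < num_samples:
        latent, data = model(rng)
        if condition(data):
            accepted.append(latent)
    return accepted


def exact_p_trick_given_all_heads():
    """Closed-form Bayes' rule answer to the same query, for comparison."""
    joint_trick = PRIOR_TRICK * TRICK_WEIGHT ** NUM_FLIPS
    joint_fair = (1 - PRIOR_TRICK) * 0.5 ** NUM_FLIPS
    return joint_trick / (joint_trick + joint_fair)


if __name__ == "__main__":
    samples = rejection_sample(coin_model, lambda flips: all(flips), num_samples=5000)
    print("estimated P(trick | all heads):", sum(samples) / len(samples))
    print("exact     P(trick | all heads):", exact_p_trick_given_all_heads())
```

Rejection sampling converges to the exact posterior as the number of samples grows, but it is wasteful when the observation is unlikely: here only about 9% of runs produce five heads and are kept. The probabilistic programming environments named above provide more efficient inference algorithms, such as Markov chain Monte Carlo.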

Assignments: 4 problem sets and a final modeling project. No exams.

Prerequisites: (1) Basic probability and statistical inference, as you would acquire in 9.014, 9.40, 18.05, 18.600, 6.008, 6.036, 6.041, or 6.042, or an equivalent class. If you have not taken one of these classes, please talk to the instructor after the first day. (2) Previous experience with programming, especially in MATLAB, Python, Scheme or JavaScript, which form the basis of the probabilistic programming environments we use. Previous exposure to core problems and methods in artificial intelligence, machine learning, or cognitive science would also be helpful.

—————————————————————————————————

Specific topics to be covered this year will follow roughly this sequence:

* Prelude: Two approaches to intelligence, pattern recognition versus modeling the world. Learning about AI by playing video games.

* Foundational questions: What kind of computation is cognition? How does the mind get so much from so little? How is learning even possible? How can you learn to learn?

* Introduction to Bayesian inference and Bayesian concept learning: Flipping coins, rolling dice, and the number game.

* Human cognition as rational statistical inference: Bayes meets Marr's levels of analysis. Case studies in modeling surface perception and predicting the future.

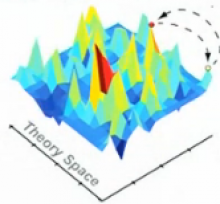

* Modeling and inference tradeoffs, or “Different ways to be Bayesian”: Comparing humans, statisticians, scientists, and robots. The sweet spot for intelligence: Fast, cheap, approximate inference in rich, flexible, causally structured models.

* Probabilistic programming languages: Generalizations of Bayesian networks (directed graphical models) that can capture common-sense reasoning. Modeling social evaluation and attribution, visual scene understanding and common-sense physical reasoning.

* Approximate probabilistic inference schemes based on sampling (Markov chain Monte Carlo; sequential Monte Carlo, or particle filtering) and on deep neural networks, and their use in modeling the dynamics of attention, online sentence processing, object recognition and multiple object tracking.

* Learning model structure as a higher-level Bayesian inference, and the Bayes Occam's razor. Modeling visual learning and classical conditioning in animals.

* Hierarchical Bayesian models: a framework for learning to learn, transfer learning, and multitask learning. Modeling how children learn the meanings of words, and how they acquire the basis for rapid ('one-shot') learning. Building a machine that learns words as children do.

* Probabilistic models for unsupervised clustering: Modeling human categorization and category discovery; prototype and exemplar categories; categorizing objects by relations and causal properties.

* Nonparametric Bayesian models (capturing the long tail of an infinitely complex world): Dirichlet processes in category learning, adaptor grammars and models for morphology in language.

* Planning with Markov Decision Processes (MDPs): Modeling single- and multi-agent decision-making. Modeling human 'theory of mind' as inverse planning.

* Modeling human cognitive development (how we get to be so smart): infants' probabilistic reasoning and curiosity; how children learn about causality and number; the growth of intuitive