Projects: Theoretical Frameworks for Intelligence

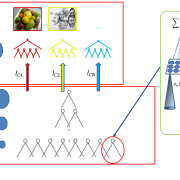

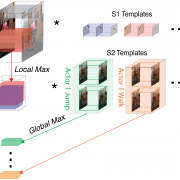

Following a recent theory of the ventral stream of visual cortex (Anselmi and Poggio, 2014), which conjectures that invariance is the crux of object recognition, we investigate models that learn invariant representations of the visual world in an unsupervised fashion for face and object recognition. We aim to account for neurophysiological, developmental and psychophysical observations.

The project focuses on the problem of unsupervised learning of image representations capable of significantly lowering the sample complexity of recognition.

The aim of the project is to give a solid mathematical grounding for a computational theory of the feedforward path of the ventral stream in visual cortex based on the hypothesis that its main function is the encoding of invariant representations of images.
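
One way to make the idea of an invariant representation concrete is to pool the responses of an input to every transformed copy of a few stored templates. The sketch below is illustrative only and is not taken from the project: it assumes one-dimensional signals, cyclic shifts as the transformation group, and low-order moments as the pooling function. The names `shift`, `normalize` and `signature` are hypothetical.

```python
import math
import random


def shift(v, s):
    """Return a copy of list v cyclically shifted right by s positions."""
    s %= len(v)
    return v[-s:] + v[:-s]


def normalize(v):
    """Scale v to unit Euclidean norm."""
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def signature(x, templates, n_moments=3):
    """Pool dot products of x with all cyclic shifts of each template.

    Shifting x only permutes the set of dot products, so any statistic
    of that set (here the first n_moments moments) is unchanged.
    """
    x = normalize(x)
    sig = []
    for t in templates:
        t = normalize(t)
        responses = [
            sum(a * b for a, b in zip(x, shift(t, s))) for s in range(len(t))
        ]
        for p in range(1, n_moments + 1):
            sig.append(sum(r ** p for r in responses) / len(responses))
    return sig


if __name__ == "__main__":
    rng = random.Random(0)
    n = 16
    templates = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(4)]
    x = [rng.gauss(0, 1) for _ in range(n)]
    original = signature(x, templates)
    shifted = signature(shift(x, 5), templates)
    # The largest difference should be at floating-point rounding level.
    print(max(abs(a - b) for a, b in zip(original, shifted)))
```

In this toy setting the templates play the role of stored views, and a classifier trained on `signature` outputs never needs to see shifted examples, which is the sense in which invariance can lower sample complexity.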

Recognizing sounds and speech from a small number of labelled examples, as humans do, depends on the properties of the sensory data representation. A theory of unsupervised learning of invariant representations for vision is extended to the auditory domain. The goal is to develop algorithms and computational models for speech recognition in machines while formulating hypotheses about learning and processing mechanisms in the human auditory cortex.

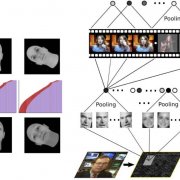

Recognizing actions from dynamic visual input is an important component of social and scene understanding. The majority of visual neuroscience studies, however, focus on recognizing static objects in artificial settings. Here we apply magnetoencephalography (MEG) decoding and a computational model to a novel data set of natural movies to provide a mechanistic explanation of invariant action recognition in human visual cortex.

The central nervous system transforms external stimuli into neural representations (hereafter “reps” for brevity), which form the basis for behavior learning and action selection [1]. Progress in neurophysiology, especially in the last decade, has begun to reveal aspects of the neural reps employed by humans and other animals [2–5]. Parallel progress in machine learning has produced numerical methods for automatically learning neural reps, used by artificial systems, that successfully inform behavior learning [6–8] and action selection [6–9].

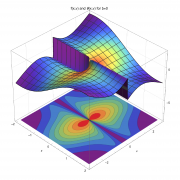

Mounting evidence shows that humans combine uncertain sensory information with prior knowledge to make inferences and decisions in a Bayesian manner. We seek to reconcile Bayesian theories of cognition with the apparent complexity of inference and learning by demonstrating neurally plausible mechanisms and exploring their theoretical limitations.
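
As a reminder of what combining uncertain sensory information with prior knowledge means in the simplest case, the sketch below works through the textbook conjugate Gaussian update. It is an illustration, not the project's mechanism: it assumes a Gaussian prior and a single observation with Gaussian noise, and the function name `gaussian_posterior` is hypothetical.

```python
def gaussian_posterior(prior_mean, prior_var, obs, obs_var):
    """Posterior mean and variance for a Gaussian prior and Gaussian noise.

    Precisions (inverse variances) add, and the posterior mean is the
    precision-weighted average of the prior mean and the observation.
    """
    prior_precision = 1.0 / prior_var
    obs_precision = 1.0 / obs_var
    post_var = 1.0 / (prior_precision + obs_precision)
    post_mean = post_var * (prior_precision * prior_mean + obs_precision * obs)
    return post_mean, post_var


if __name__ == "__main__":
    # Equally reliable prior and observation: the estimate lands halfway.
    print(gaussian_posterior(0.0, 1.0, 2.0, 1.0))
    # A noisier observation is pulled more strongly toward the prior.
    print(gaussian_posterior(0.0, 1.0, 2.0, 4.0))
```

The update is cheap here only because the model is conjugate. With many interacting latent variables the exact posterior generally has no closed form, which is where the complexity of inference becomes a concern.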

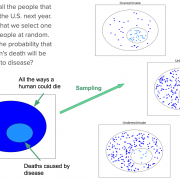

How do people draw inferences over a large number of possible latent causes? We present a stochastic hypothesis generation algorithm that samples the combinatorially large space of "generate-able" hypotheses and use it to model several biases and features of human statistical inference.
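
To illustrate what sampling a combinatorially large hypothesis space can look like, the sketch below runs a Metropolis sampler over binary vectors that mark which latent causes are present. It is a generic example under stated assumptions, not the algorithm used in the project: the single-flip proposal, the scoring function passed as `log_score`, and the names `sample_hypotheses` and `marginals` are all hypothetical.

```python
import math
import random


def sample_hypotheses(log_score, n_causes, n_steps, seed=0):
    """Metropolis sampling over binary hypotheses (one bit per latent cause).

    log_score(h) returns the unnormalized log posterior of hypothesis h.
    Each step proposes flipping one randomly chosen cause on or off.
    """
    rng = random.Random(seed)
    h = [0] * n_causes
    current = log_score(h)
    samples = []
    for _ in range(n_steps):
        i = rng.randrange(n_causes)
        proposal = h.copy()
        proposal[i] = 1 - proposal[i]
        candidate = log_score(proposal)
        # Symmetric proposal: accept with probability min(1, score ratio).
        if candidate >= current or rng.random() < math.exp(candidate - current):
            h, current = proposal, candidate
        samples.append(tuple(h))
    return samples


def marginals(samples):
    """Fraction of samples in which each cause is present."""
    n = len(samples)
    return [sum(s[i] for s in samples) / n for i in range(len(samples[0]))]


if __name__ == "__main__":
    # Toy posterior with independent causes, each with its own log odds.
    log_odds = [1.0, -1.0, 0.0]

    def score(h):
        return sum(b * w for b, w in zip(h, log_odds))

    print(marginals(sample_hypotheses(score, 3, 30000)))
```

The sampler never enumerates all 2^n hypotheses; it only scores the ones it visits. A short chain yields estimates that still depend on the starting hypothesis, so the number of steps controls how closely the sample frequencies approximate the true posterior.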

Current hierarchical models of object recognition lack the non-uniform resolution of the retina, and they also typically neglect scale. We show that these two issues are intimately related, leading to several predictions. We further conjecture that the retinal resolution function may represent an optimal input shape, in space and scale, for a single feed-forward pass. The resulting outputs may encode structural information useful for other aspects of the CBMM challenge, beyond the recognition of single objects.

Euclidean plane geometry builds on intuitions so compelling that they were believed for centuries to have the force of logical necessity. Where do these intuitions begin, and how are they elaborated over development? We approach these questions through behavioral studies of human infants, preschool children, and school children up to the threshold of formal education in geometry, together with computational models focused on processes of mental simulation.