State-of-the-art learning algorithms in machine learning rely on large sets of labeled examples to reach high accuracy. One example is deep convolutional neural networks for object recognition, which are trained on millions of images.

In contrast, humans can learn to recognize different objects after seeing only a few examples of them.

A key observation is that many tasks are invariant to transformations of the data: for example, the recognition task is invariant to changes in pose and lighting, since those transformations do not alter the object identity. They can therefore be considered symmetries of the recognition learning problem.

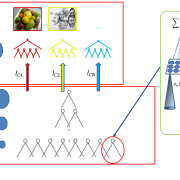

The project is motivated by the hypothesis (proved in the case of group transformations) that the ability to learn to factor out those symmetries can effectively decrease the need for labeled data, and therefore by the belief that a model of the visual cortex based on invariant representations can give a better description of how humans learn to recognize objects.

The project goals are to characterize a biologically inspired hierarchical image representation which is selective but at the same time invariant to group transformations, and to propose a biophysical model of the algorithm based on a simple-complex cells module.
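
As a rough illustration of what "invariant to group transformations" can mean for a simple-complex cells module, here is a minimal toy sketch. It is not the project's model. It assumes the group is the set of cyclic shifts of a 1-D signal, that "simple cells" compute dot products between the input and every shifted copy of a stored template, and that "complex cells" pool those responses with order-independent statistics. The function names, the random templates, and the choice of pooling statistics are all hypothetical.

```python
import numpy as np

def orbit(template):
    """All cyclic shifts of a 1-D template, i.e. its orbit under the
    (assumed) group of cyclic translations."""
    n = template.shape[0]
    return np.stack([np.roll(template, g) for g in range(n)])

def simple_cells(x, template):
    """Toy simple-cell stage: dot product of the input with each
    transformed copy of the template."""
    return orbit(template) @ x

def complex_cells(responses):
    """Toy complex-cell stage: pool over the orbit with statistics that
    do not depend on the order of the responses (assumed choice)."""
    return np.array([
        responses.mean(),
        np.abs(responses).max(),
        (responses ** 2).mean(),
    ])

def signature(x, templates):
    """Concatenate the pooled responses for every template."""
    return np.concatenate(
        [complex_cells(simple_cells(x, t)) for t in templates]
    )

rng = np.random.default_rng(0)
templates = rng.standard_normal((4, 16))  # hypothetical stored templates
x = rng.standard_normal(16)               # one "object"
y = rng.standard_normal(16)               # a different "object"

sig_x = signature(x, templates)
sig_x_shifted = signature(np.roll(x, 5), templates)  # same object, transformed
sig_y = signature(y, templates)

# Invariance: shifting the input only permutes the simple-cell responses.
print(np.allclose(sig_x, sig_x_shifted))
# Selectivity: a different input generally gives a different signature.
print(np.allclose(sig_x, sig_y))
```

Shifting the input only reorders the set of simple-cell responses, so any pooling that ignores order yields the same signature for the whole orbit of an object, while signatures of unrelated inputs generally differ. With richer pooling (for example, a histogram of the responses) the signature retains more information about the input.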