Projects: Social Intelligence

What are the statistical properties of the faces our visual system encounters in everyday life? Which of these properties does the brain use to decide where and when we look in social contexts? Almost all work on how we look at and recognize faces has been done in the inherently non-social environment of the lab. Here, we use state-of-the-art mobile eye tracking and video technology to ascertain how and why people look at faces in real-world environments.

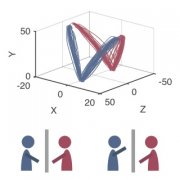

Humans are experts at reading others’ actions. They effortlessly navigate a crowded street or reach for a handshake without grabbing an elbow. This suggests real-time, efficient processing of others’ movements and the ability to predict intended movements. Here, using interactive tasks and real-time recording of the bodily movements of two participants, we aim to understand how people predict each other’s action goals. By analyzing motor movements in interactive situations, we will explore when cues to action goals become available and where in the body they are located.

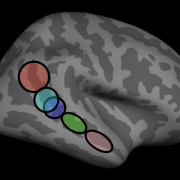

The human superior temporal sulcus (STS) is considered a hub for social perception and cognition, including the perception of faces and human motion, as well as understanding others’ actions, mental states, and language. However, the functional organization of the STS remains debated: is this broad region composed of multiple functionally distinct modules, each specialized for a different process, or are STS subregions multifunctional, contributing to multiple processes? Is the STS spatially organized, and if so, what are the dominant features of this organization?

Human visual experience contains not only physical information, such as shapes and objects, but also a rich understanding of others' mental states, including intentions, beliefs, and desires. In social and developmental psychology, this ability is known as "Theory of Mind" (ToM).

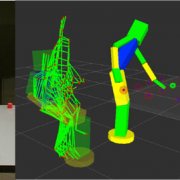

Recognizing actions from dynamic visual input is an important component of social and scene understanding. Most visual neuroscience studies, however, focus on recognizing static objects in artificial settings. Here we apply magnetoencephalography (MEG) decoding and a computational model to a novel data set of natural movies to provide a mechanistic explanation of invariant action recognition in human visual cortex.
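One common way to test whether a neural code is invariant is cross-condition decoding. A classifier is trained on responses to actions seen under one condition, here a hypothetical "view A". It is then tested on the same actions under another condition, "view B", and this is repeated independently at each time point. The sketch below illustrates that idea on synthetic sensor data. It is not this project's pipeline: the nearest-centroid correlation classifier, the two-view split, the array shapes, and the simulated signal are all assumptions made for the example.

```python
import numpy as np

def nearest_centroid_accuracy(X_train, y_train, X_test, y_test):
    """Classify test trials by Pearson correlation with class-mean training patterns."""
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    # Center and normalize each pattern across sensors so the dot product is a correlation
    Xt = X_test - X_test.mean(axis=1, keepdims=True)
    C = centroids - centroids.mean(axis=1, keepdims=True)
    Xt /= np.linalg.norm(Xt, axis=1, keepdims=True)
    C /= np.linalg.norm(C, axis=1, keepdims=True)
    pred = classes[np.argmax(Xt @ C.T, axis=1)]
    return float(np.mean(pred == y_test))

def time_resolved_cross_view(data_a, labels_a, data_b, labels_b):
    """Train on view A and test on view B separately at every time point.

    data_a, data_b: arrays of shape (trials, sensors, times) (assumed layout).
    Returns one accuracy value per time point.
    """
    n_times = data_a.shape[2]
    return np.array([
        nearest_centroid_accuracy(data_a[:, :, t], labels_a, data_b[:, :, t], labels_b)
        for t in range(n_times)
    ])

# --- Synthetic example (all parameters are hypothetical) ---
rng = np.random.default_rng(0)
n_actions, n_trials, n_sensors, n_times, onset = 5, 20, 30, 50, 20
patterns = 2.0 * rng.standard_normal((n_actions, n_sensors))  # view-invariant action code

def simulate_view(offset_scale):
    """Noise everywhere; after `onset`, add the action pattern plus a view-specific offset."""
    offset = offset_scale * rng.standard_normal(n_sensors)
    labels = np.repeat(np.arange(n_actions), n_trials)
    data = rng.standard_normal((labels.size, n_sensors, n_times))
    data[:, :, onset:] += (patterns[labels] + offset)[:, :, None]
    return data, labels

data_a, labels_a = simulate_view(0.5)
data_b, labels_b = simulate_view(0.5)
acc = time_resolved_cross_view(data_a, labels_a, data_b, labels_b)
print(acc[:onset].mean(), acc[onset:].mean())  # near chance (0.2) before onset, high after
```

In this toy setup, above-chance accuracy after `onset` comes only from the component shared across views. That is the sense in which cross-view generalization is read as evidence of invariance. Real MEG analyses typically add cross-validation, sensor preprocessing, and statistical testing against chance.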

Humans effortlessly and pervasively understand themselves and others as autonomous agents who engage in goal-directed actions. Some of these actions are directed to objects and cause changes in their physical states. Other actions are directed to other agents and cause changes in their mental states, primarily through acts of communication. This project investigates the emergence of these abilities through behavioral and computational studies of human infants.

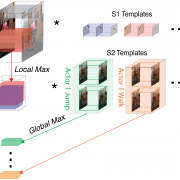

Social cognition is at the core of human intelligence. It is through social interactions that we learn. We believe that social interactions drove much of the evolution of the human brain. Indeed, the neural machinery of social cognition comprises a substantial proportion of the brain. The greatest feats of the human intellect are often the product not of individual brains but of people working together in social groups. Thus, intelligence simply cannot be understood without understanding social cognition. Yet we have no theory or even taxonomy of social intelligence, and little understanding of the underlying brain mechanisms, their development, or the computations they perform. Here we bring developmental, computational, and cognitive neuroscience approaches to bear on a newly tractable component of social intelligence: nonverbal social perception (NVSP), the ability to discern rich, multidimensional social information from a few seconds of silent video.