Projects: Exploring Future Directions

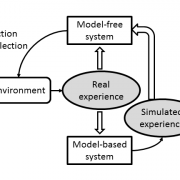

This project explores a cooperative architecture in which a model-based ("goal-directed") reinforcement learning system transfers knowledge to a model-free ("habitual") system via simulation of surrogate experience.
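One familiar way to picture this kind of transfer is a Dyna-style loop, in which a learned model replays simulated transitions to train a model-free value table. The sketch below is illustrative only and is not taken from the project: the chain environment, the function name `dyna_q`, and all parameter values are assumptions chosen to keep the example small.

```python
import random


def dyna_q(n_states=5, episodes=30, planning_steps=10,
           alpha=0.5, gamma=0.9, eps=0.1, seed=0):
    """Tabular Dyna-Q on a hypothetical 1-D chain; reward 1 at the right end."""
    rng = random.Random(seed)
    actions = (-1, +1)
    goal = n_states - 1
    # Model-free ("habitual") value table.
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    # Learned model used by the model-based system: (s, a) -> (reward, next state).
    model = {}

    def step(s, a):
        # Assumed deterministic environment: move left or right, clipped to the chain.
        s2 = min(max(s + a, 0), goal)
        return (1.0 if s2 == goal else 0.0), s2

    def update(s, a, r, s2):
        # One-step Q-learning update; no bootstrapping from the terminal state.
        target = r if s2 == goal else r + gamma * max(q[(s2, b)] for b in actions)
        q[(s, a)] += alpha * (target - q[(s, a)])

    for _ in range(episodes):
        s = 0
        while s != goal:
            if rng.random() < eps:
                a = rng.choice(actions)
            else:
                best = max(q[(s, b)] for b in actions)
                a = rng.choice([b for b in actions if q[(s, b)] == best])
            r, s2 = step(s, a)
            update(s, a, r, s2)        # learn from real experience
            model[(s, a)] = (r, s2)    # update the learned model
            for _ in range(planning_steps):
                # Surrogate experience: replay transitions simulated from the model.
                ps, pa = rng.choice(list(model))
                pr, ps2 = model[(ps, pa)]
                update(ps, pa, pr, ps2)
            s = s2
    return q


if __name__ == "__main__":
    q = dyna_q()
    for s in range(4):
        print(s, "right" if q[(s, 1)] > q[(s, -1)] else "left")
```

In this sketch the habitual table is updated by the same rule for real and simulated transitions, so the only difference between the two sources of learning is where the transition comes from.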

Feedforward circuits have proven very powerful as models of vision. However, these architectures appear unable to handle many visual tasks that the human visual system finds simple, such as identifying occluded figures. Data from the Allen Institute for Brain Science Cortical Activity Map (CAM) project may give us the opportunity to explore this limitation. What is the functional connectivity of cells in mouse V1 ...

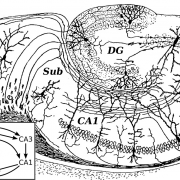

We will develop a model of Bayesian inference over context identity. This framework should provide a unified account of the circumstances under which the rodent hippocampus infers context switching, as well as the neural implementation of this computation. Developing such a theory will help to answer questions like “what happens next”.
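As a rough illustration of what inference over context identity can look like, the sketch below filters a posterior over a small set of contexts as observations arrive. Everything in it is an assumption made for the example rather than part of the project: the function name `context_posterior`, the Gaussian observation model, the context means, and the fixed per-step switching probability `hazard`.

```python
import math


def context_posterior(observations, means=(0.0, 3.0), sigma=1.0, hazard=0.05):
    """Forward filtering over a hypothetical set of contexts (needs >= 2 contexts)."""
    k = len(means)
    belief = [1.0 / k] * k  # uniform initial belief over contexts
    history = []
    for x in observations:
        # Prediction step: the context persists with probability 1 - hazard,
        # otherwise it switches uniformly to one of the other contexts.
        prior = [(1 - hazard) * belief[i] + hazard * (1 - belief[i]) / (k - 1)
                 for i in range(k)]
        # Assumed Gaussian likelihood of the observation under each context
        # (the shared normalizing constant cancels in the posterior).
        like = [math.exp(-0.5 * ((x - m) / sigma) ** 2) for m in means]
        # Bayes' rule: posterior is proportional to prior times likelihood.
        post = [p * l for p, l in zip(prior, like)]
        z = sum(post)
        belief = [p / z for p in post]
        history.append(belief)
    return history


if __name__ == "__main__":
    obs = [0.1, -0.2, 0.0, 3.1, 2.9, 3.2]
    for x, b in zip(obs, context_posterior(obs)):
        print(x, [round(p, 3) for p in b])
```

With these assumed settings, the belief shifts from the first context to the second once the observations move toward the second context's mean, which is the kind of inferred switch the framework is meant to explain.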

The goal of the visual recognition system is to extract abstract features from the retinal image that correlate with real-world objects. The visual system achieves this goal by tuning, layer by layer, the synaptic weights that define neurons’ receptive fields. One essential feature of the resulting network is that it must be selective but simultaneously invariant: it must respond to the same feature across changes in retinal position, size, rotation angle, or partial occlusion. We know that neurons in the adult macaque cortex show selectivity and invariance, but little is known about how they acquire these properties during early development. This is a conceptual gap with important implications for models of visual recognition.

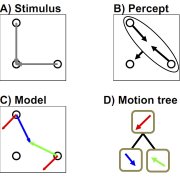

This project explores a Bayesian theory of vector analysis for hierarchical motion perception. The theory takes a step towards understanding how moving scenes are parsed into objects.

Many of the structures humans impose on the world, such as conceptual categories and intuitive theories, are naturally expressible in symbolic or logical form. Although these learning problems are discrete, relatively noise-free, and easily represented on a computer, the performance of the best symbolic learning algorithms lags far behind that of humans. This project uses a combination of experimentation and modeling to understand and replicate human skill in symbolic domains.

Sound texture perception is believed to be mediated by statistics that provide a compressed summary representation of dense audio signals. We seek to understand texture perception from a normative perspective, and to characterize the conditions in which the brain arrives at statistical representations.

Sensory systems encode natural scenes with representations of increasing complexity. We seek to learn auditory representations of intermediate complexity, to better understand the structure of sound and to generate hypotheses about neural encoding mechanisms.

How do people draw inferences over a large number of possible latent causes? We present a stochastic hypothesis generation algorithm that samples the combinatorially large space of "generate-able" hypotheses and use it to model several biases and features of human statistical inference.

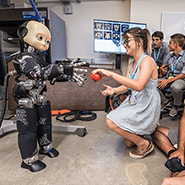

The goal of this research thrust is to maintain diversity and serendipity in the Center. Formed in the middle of CBMM's third year, it contains new projects, often by new faculty who are not part of the other thrusts, that fit into the overall mission of the Center and may become mainstream research directions for the Center in the near future.
