Competition and cooperation between multiple learning systems

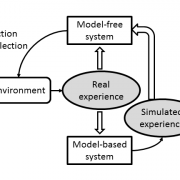

Modern theories of reinforcement learning posit two systems that compete for control of behavior: a "model-free" (or "habitual") system that learns cached state-action values, and a "model-based" (or "goal-directed") system that learns a model of the world and then uses it to plan actions. Many behavioral and neural studies indicate that these two systems can be dissociated, but recent data cast doubt on a strict separation between them. This project explores, both theoretically and experimentally, a cooperative architecture in which the model-based system transfers knowledge to the model-free system by simulating surrogate experience.
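A classic computational instance of this kind of cooperation is Sutton's Dyna architecture: experience updates both cached values and a learned model, and the model then replays simulated ("surrogate") transitions that train the cached values further. The sketch below is a minimal tabular Dyna-Q, offered only as an illustration under stated assumptions. It is not the project's own model. The corridor task, the function names `step` and `dyna_q`, and all parameter values are hypothetical choices made for this example.

```python
import random
from collections import defaultdict

# Hypothetical toy task (assumption for illustration): a 1-D corridor of
# N_STATES cells. The agent starts in cell 0; reaching the last cell ends
# the episode with reward 1.
N_STATES = 6
ACTIONS = (-1, +1)  # step left or step right


def step(state, action):
    """Environment dynamics: return (next_state, reward, done)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def dyna_q(episodes=50, planning_steps=10, alpha=0.5, gamma=0.95,
           epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # "model-free" system: cached state-action values
    model = {}              # "model-based" system: learned (s, a) -> (r, s', done)

    def greedy(s):
        # Pick a highest-valued action, breaking ties at random.
        best = max(q[(s, a)] for a in ACTIONS)
        return rng.choice([a for a in ACTIONS if q[(s, a)] == best])

    def q_update(s, a, r, s2, done):
        # One-step Q-learning update toward r + gamma * max_b Q(s', b).
        target = r if done else r + gamma * max(q[(s2, b)] for b in ACTIONS)
        q[(s, a)] += alpha * (target - q[(s, a)])

    for _ in range(episodes):
        s, done = 0, False
        while not done:
            a = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(s)
            s2, r, done = step(s, a)
            q_update(s, a, r, s2, done)    # learn from real experience
            model[(s, a)] = (r, s2, done)  # update the (deterministic) world model
            # Planning: train the cached values on surrogate experience
            # simulated from previously observed model transitions.
            for _ in range(planning_steps):
                (ps, pa), (pr, ps2, pdone) = rng.choice(list(model.items()))
                q_update(ps, pa, pr, ps2, pdone)
            s = s2
    return q


q = dyna_q()
greedy_policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(greedy_policy)  # expected: [1, 1, 1, 1, 1] (always step right)
```

Setting `planning_steps=0` reduces this to plain model-free Q-learning, which makes the contribution of the simulated experience easy to compare in a toy setting like this one.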

Associated Research Thrust(s):

Principal Investigators:

Postdoctoral Associates and Fellows: