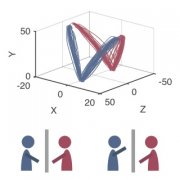

Humans are experts at reading others’ actions. They effortlessly navigate a crowded street or reach for a handshake without grabbing an elbow. This suggests that people process others’ movements efficiently and in real time, and that they can predict intended movements. Here, using interactive tasks and real-time recording of the bodily movements of two participants, we aim to understand how they predict each other’s action goals. By analyzing motor movements in interactive situations, we will explore when the cues to action goals become available and where in the body they are located.

Social Intelligence

Social cognition is at the core of human intelligence. It is through social interactions that we learn. We believe that social interactions drove much of the evolution of the human brain. Indeed, the neural machinery of social cognition makes up a substantial proportion of the brain. The greatest feats of the human intellect are often the product not of individual brains but of people working together in social groups. Thus, intelligence simply cannot be understood without understanding social cognition. Yet we have no theory or even taxonomy of social intelligence, and little understanding of the underlying brain mechanisms, their development, or the computations they perform. Here we bring developmental, computational, and cognitive neuroscience approaches to bear on a newly tractable component of social intelligence: nonverbal social perception (NVSP), the ability to discern rich multidimensional social information from a few seconds of silent video.
