Oct 26, 2021 - 4:00 pm

Venue:

MIBR Seminar Room 46-3189

Speaker/s:

Drs. Jie Zheng and Mengmi Zhang

Please note, Dr. Zhang will be presenting remotely via Zoom.

Abstract:

Jie Zheng's presentation:

Title: Neurons that structure memories of ordered experience in humans

Abstract: The process of constructing temporal associations among related events is essential to episodic memory. However, the neural mechanism that accomplishes this function remains unclear. To address this question, we recorded single-unit activity in humans while subjects performed a temporal order memory task. During encoding, subjects watched a series of clips, each consisting of 4 events, and were later instructed to retrieve the ordinal information of the event sequences. We found that hippocampal neurons in humans could index specific orders of events with increased firing rates (i.e., rate order cells) or clustered spike timing relative to theta phases (i.e., phase order cells), both of which are transferable across different encoding experiences (e.g., different clips). Rate order cells also increased their firing rates when subjects correctly retrieved the temporal information of their preferred ordered events. Phase order cells demonstrated stronger phase precession at event transitions during encoding for clips whose ordinal information was subsequently retrieved correctly. These results not only highlight the critical role of the hippocampus in structuring memories of continuous event sequences but also suggest a potential neural code representing temporal associations among events.

Mengmi Zhang's [virtual] presentation:

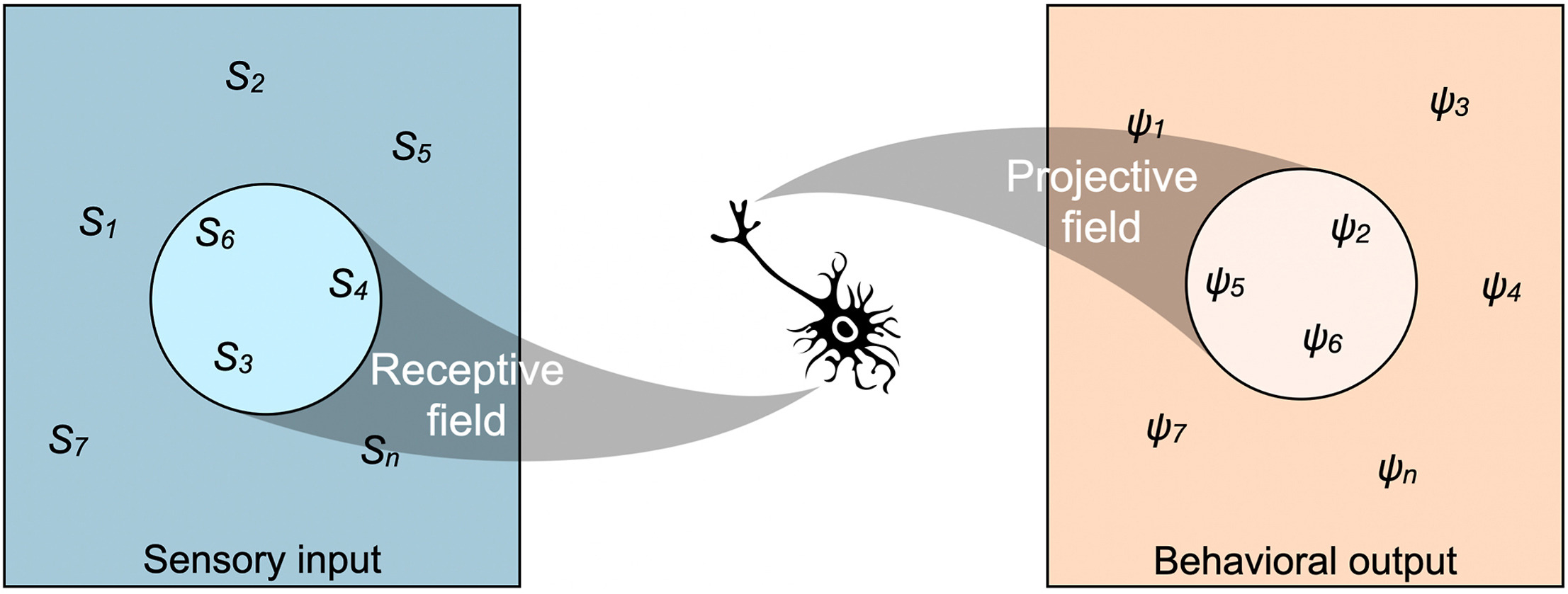

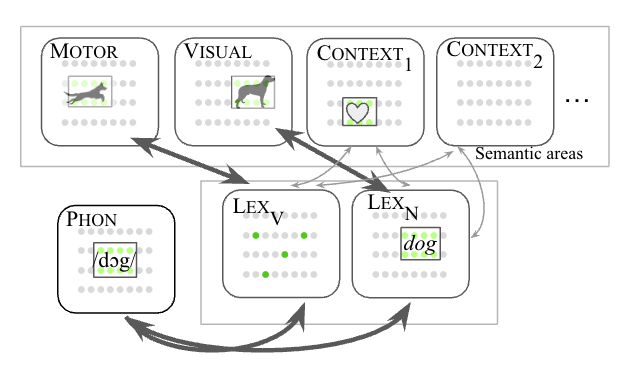

Title: Visual Search Asymmetry: Deep Nets and Humans Share Similar Inherent Biases

Abstract: Visual search is a ubiquitous and often challenging daily task, exemplified by looking for the car keys at home or a friend in a crowd. An intriguing property of some classical search tasks is an asymmetry such that finding a target A among distractors B can be easier than finding B among A. To elucidate the mechanisms responsible for asymmetry in visual search, we propose a computational model that takes a target and a search image as inputs and produces a sequence of eye movements until the target is found. The model integrates eccentricity-dependent visual recognition with target-dependent top-down cues. We compared the model against human behavior in six paradigmatic search tasks that show asymmetry in humans. Without prior exposure to the stimuli or task-specific training, the model provides a plausible mechanism for search asymmetry. We hypothesized that the polarity of search asymmetry arises from experience with the natural environment. We tested this hypothesis by training the model on an augmented version of ImageNet where the biases of natural images were either removed or reversed. The polarity of search asymmetry disappeared or was altered depending on the training protocol. This study highlights how classical perceptual properties can emerge in neural network models, without the need for task-specific training, but rather as a consequence of the statistical properties of the developmental diet fed to the model. Our work will be presented at the upcoming NeurIPS 2021 conference. All source code and stimuli are publicly available.

---

The Fall 2021 CBMM Research Meetings will be hosted in a hybrid format. Please see the information below about attending the event either in person or remotely via Zoom.

Details to attend talk remotely via Zoom:

Zoom connection link: https://mit.zoom.us/j/91580119583?pwd=Z212ZjM3MFNFSHNTYlcyaUJZbjQrQT09

Guidance for attending in person:

MIT attendees:

MIT attendees will need to be registered via the MIT COVIDpass system to have access to MIT Building 46.

Please visit https://covidpass.mit.edu/ for more information regarding MIT COVIDpass.

Non-MIT attendees:

MIT is currently welcoming visitors to attend talks in person. All visitors to the MIT campus are required to follow MIT COVID-19 protocols; see https://now.mit.edu/policies/campus-access-and-visitors/. Specifically, visitors are required to wear a face covering/mask while indoors and use the new MIT Tim Tickets system for accessing MIT buildings. Per MIT’s event policy, use of the Tim Tickets system is required for all indoor events; for information about this and other current MIT policies, visit MIT Now.

Link to this event's MIT TIM TICKET: https://tim-tickets.atlas-apps.mit.edu/o1T9dFk9TTcDfzKF8

To access MIT Bldg. 46 with a TIM Ticket, please enter the building via the McGovern/Main Street entrance - 524 Main Street (on GPS). This entrance is equipped with a QR reader that can read the TIM Ticket. A map and an image of this entrance are available at https://mcgovern.mit.edu/contact-us/

General TIM Ticket information:

A visitor may use a Tim Ticket to access Bldg. 46 any time between 6 a.m. and 6 p.m., Monday through Friday.

A Tim Ticket is a QR code that serves as a visitor pass. A Tim Ticket, named for MIT’s mascot, Tim the Beaver, is the equivalent of giving someone your key to unlock a building door, without actually giving up your keys.

This system allows MIT to collect basic information about visitors entering MIT buildings while providing MIT hosts a convenient way to invite visitors to safely access our campus.

Information collected by the TIM Ticket:

- Name

- Phone number

- Email address

- COVID-19 vaccination status (i.e., whether fully vaccinated or exempt)

- Symptom status and wellness information for the day of visit

The Tim Tickets system can be accessed by invited guests through the MIT Tim Tickets mobile application (available for iOS 13+ or Android 7+) or on the web at visitors.mit.edu.

Visitors must acknowledge and agree to terms for campus access, confirm basic contact information, and submit a brief attestation about health and vaccination status. Visitors should complete these steps at least 30 minutes before scanning into an MIT building.

For more information on TIM Tickets, please visit https://covidapps.mit.edu/visitors#for-access

Organizer:

Hector Penagos

Organizer Email:

cbmm-contact@mit.edu