When and How CNNs Generalize to Out-of-Distribution Category-Viewpoint Combinations [video]

Date Posted:

February 21, 2022

Date Recorded:

February 16, 2022

CBMM Speaker(s):

Xavier Boix, Spandan Madan, Tomotake Sasaki

Speaker(s):

Helen Ho


Description:

CBMM researchers and authors Spandan Madan, Xavier Boix, Helen Ho, and Tomotake Sasaki discuss their latest research, published in Nature Machine Intelligence.

[MUSIC PLAYING] SPANDAN MADAN: I'm Spandan Madan. I'm a PhD student at the Visual Computing Group at Harvard.

XAVIER BOIX: I'm Xavier Boix. I'm a research scientist at the Brain and Cognitive Science Department at MIT.

SPANDAN MADAN: In the real world, we often see objects from multiple different viewpoints. So you can see a chair from the side, from the top, from the bottom, and as humans we can recognize it easily. But recent works have shown that neural networks can get pretty badly impacted when you train them on a dataset which is biased in terms of what viewpoints they're shown and then test them outside that bias.

XAVIER BOIX: Dataset bias is when you have a training set that contains objects only in a few viewpoints or illumination conditions or background conditions. And then at test time, you have examples which are not contained in the training set: objects in viewpoints that you have never seen, backgrounds you have never seen, illumination that you have never seen. Neural networks get severely affected when you test them in these novel conditions.

SPANDAN MADAN: And we were quite intrigued to study why this happens and what are the mechanisms that drive this kind of behavior. But one of the big challenges in studying this kind of behavior is that we can't control bias. So most of the existing datasets are images that we [INAUDIBLE] from the internet. So we can't control the kind of viewpoints that they're being tested on.

XAVIER BOIX: So we approached this problem with the mindset of a neuroscientist. We identified several dimensions of the problem that can impact the behavior of the neural network and the dataset bias. And we designed controlled experiments to study them.

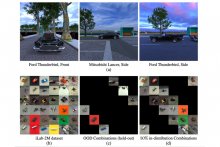

SPANDAN MADAN: Which is where we started using computer graphics, because that allowed us to create custom 3D worlds. And we could control the kind of viewpoints and the category combinations that we were showing during training.

And then by controlling the amount of bias and the kind of bias, we were able to understand or study how these networks are impacted and how they can be improved. So this is where our collaborators came in who enabled us to build this 3D engine that we then used to create a dataset.
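As a rough illustration of what "controlling the kind of viewpoints and the category combinations" can mean in practice, here is a minimal Python sketch. It is not the authors' pipeline: the function names `split_combinations` and `data_diversity`, the rotation scheme, and the example category and viewpoint labels are all assumptions made for this example. Each category is paired with only some viewpoints for training, and the remaining pairs are held out as out-of-distribution test combinations.

```python
from itertools import product


def split_combinations(categories, viewpoints, seen_per_category):
    """Split all (category, viewpoint) pairs into seen and unseen sets.

    Hypothetical scheme: category i is paired with `seen_per_category`
    consecutive viewpoints starting at index i (wrapping around), so no
    single viewpoint is tied to one category only when there are several
    categories. Assumes the labels in each list are unique.
    """
    n = len(viewpoints)
    if not 1 <= seen_per_category <= n:
        raise ValueError("seen_per_category must be between 1 and len(viewpoints)")

    seen = set()
    for i, category in enumerate(categories):
        for k in range(seen_per_category):
            seen.add((category, viewpoints[(i + k) % n]))

    # Every combination that was not selected for training is held out.
    unseen = set(product(categories, viewpoints)) - seen
    return seen, unseen


def data_diversity(seen, categories, viewpoints):
    """Fraction of all category-viewpoint combinations present in training."""
    return len(seen) / (len(categories) * len(viewpoints))


# Example labels are placeholders, not the categories used in the study.
categories = ["car", "chair", "boat", "plane"]
viewpoints = ["front", "side", "back", "top"]
seen, unseen = split_combinations(categories, viewpoints, seen_per_category=2)
print(sorted(seen))
print(sorted(unseen))
print(data_diversity(seen, categories, viewpoints))
```

Raising `seen_per_category` increases the share of combinations shown during training, which is one simple way to vary the amount of bias while the held-out combinations stay disjoint from the training ones.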

HELEN HO: My colleague Nishchal Bhandari really laid the foundation for this graphics pipeline for image dataset generation. I came in to really try to expand it and give researchers more flexibility. Spandan had a bunch of questions about things like, where can we render the cars from, perspective-wise, or what if we render the cars with different colors, different materials? So building in that flexibility and that tooling to give him that control is really one of the main goals of this project.

XAVIER BOIX: The first thing we found is that networks actually are able to overcome this bias, but only under some conditions: the training set needs to have enough diversity, a diverse range of viewpoints, illuminations, and backgrounds. And then the networks gain the ability to generalize.

SPANDAN MADAN: Yeah, and in fact, one of the experiments that surprised us a lot was that we did an experiment where we showed the network something very similar to what it's going to get tested on. But instead of that performing better, what worked was, even if it's dissimilar stuff, if you show a lot of diversity, the network actually performs better. So I guess we found that even dissimilar diversity is better than less diversity but similar data.

XAVIER BOIX: Yeah, and also the amount of training data, which we thought would be very important as well. But here it seemed to be less relevant compared to data diversity. So we saw that more training data improves the ability to generalize up to a certain point. But data diversity is what actually helps in generalizing beyond the training distribution.

So another exciting finding was when we trained networks to recognize both object category and object viewpoint. So here there are two options, which are to use one network to do both tasks, or to use two separate networks, one for category and the other for viewpoint. And what we found is that when there is dataset bias, it's much, much better to use two separate networks than only one of them.

SPANDAN MADAN: Right, and this was surprising, because historical literature suggests that if you want to do two tasks, then it can help to learn the two tasks simultaneously in the same network. But none of these works look at dataset bias. And we show that if there is dataset bias at play, then this is no longer true. It's better to use the two networks separately, because they outperform the shared one.

XAVIER BOIX: So all these findings were exciting because they open new applications for overcoming dataset bias. And, for example, our collaborators at Fujitsu and others will benefit from that.

TOMOTAKE SASAKI: In real business scenarios, it is very difficult or perhaps infeasible to collect a perfect training dataset that covers all possible conditions. We should assume that the training data are biased and that deep networks will encounter novel conditions after deployment.

So the findings in this study are very important from engineering and application viewpoints, as well as the scientific one. In fact, the study gave us many hints on how to develop better deep learning methods. We have been working together toward this goal. And this effort has already begun to bear fruit. We would like to apply the developed method to real-world applications, for example, traffic monitoring and medical image processing.

SPANDAN MADAN: So going forward, we're quite excited about this approach of controlling the kind of dataset that [INAUDIBLE] are trained on, and using computer graphics to control the training and the testing distribution, and then understanding how networks are impacted by these data distributions.

XAVIER BOIX: Yeah, definitely. And studying deep neural networks through the lens of a neuroscientist has proven successful in this case. And we hope to bring the same approach to other more complex domains and other aspects of deep networks. And we are thankful for collaborating here at the Center for Brains, Minds, and Machines, because this approach has been directly inspired by our collaborators here.
