Tiny amounts of artificial noise can fool neural networks, but not humans. Some researchers are looking to neuroscience for a fix.

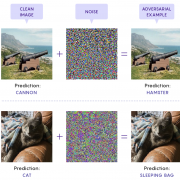

Artificial intelligence sees things we don’t — often to its detriment. While machines have gotten incredibly good at recognizing images, it’s still easy to fool them. Simply add a tiny amount of noise to the input images, undetectable to the human eye, and the AI suddenly classifies school buses, dogs or buildings as completely different objects, like ostriches.

In a paper posted online in June, Nicolas Papernot of the University of Toronto and his colleagues studied different kinds of machine learning models that process language and found a way to fool them by tampering with their input text in a way that is invisible to humans. The hidden instructions are seen only by the computer, when it reads the code behind the text to map the letters to bytes in its memory. Papernot’s team showed that even tiny additions, like single characters that encode white space, can wreak havoc on the model’s understanding of the text. And these mix-ups have consequences for human users, too: In one example, a single character caused the algorithm to output a sentence telling the user to send money to an incorrect bank account.
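To make the idea concrete, here is a minimal Python sketch of why a reader and a computer can disagree about the same sentence. It is not the attack from the paper, whose details are not given here. The sample sentence and the choice of the zero-width space (U+200B) are assumptions made purely for illustration.

```python
# Illustrative only: the sentence and the chosen character are assumptions,
# not the inputs used in the paper described above.
ZERO_WIDTH_SPACE = "\u200b"  # typically renders as nothing on screen

clean = "Send the money to account 1234"
tampered = "Send the money to acc" + ZERO_WIDTH_SPACE + "ount 1234"

# On most displays the two strings look identical to a human reader...
print(clean)
print(tampered)

# ...but the computer compares code points and bytes, not appearances.
print(clean == tampered)

clean_bytes = clean.encode("utf-8")
tampered_bytes = tampered.encode("utf-8")
print(len(clean_bytes), len(tampered_bytes))

# List the positions of any characters that fall outside printable ASCII.
hidden = [(i, hex(ord(ch))) for i, ch in enumerate(tampered) if not (32 <= ord(ch) < 127)]
print(hidden)
```

A language model receives the tampered byte sequence, so the word "account" may be split into unfamiliar pieces even though nothing looks wrong on screen.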

These acts of deception are a type of attack known as adversarial examples, intentional changes to an input designed to deceive an algorithm and cause it to make a mistake. This vulnerability achieved prominence in AI research in 2013 when researchers deceived a deep neural network, a machine learning model with many layers of artificial “neurons” that perform computations.
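The mechanics are easier to see on a toy model than on a deep network. The sketch below uses a hypothetical 100-"pixel" linear classifier, with made-up weights and labels, and nudges every pixel by a small amount in the direction that hurts the score most, in the spirit of the fast gradient sign method. It is a simplified stand-in, not the procedure from the 2013 work.

```python
# Toy linear "image classifier". All weights, pixels and labels are hypothetical.

def score(w, b, x):
    """Linear score: positive means 'school bus', otherwise 'ostrich'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def label(s):
    return "school bus" if s > 0 else "ostrich"

def sign(v):
    return (v > 0) - (v < 0)

def perturb(w, x, eps):
    """Move each pixel by at most eps in the direction that lowers the score.

    For a linear model, the gradient of the score with respect to the input
    is simply w, so the most damaging small step follows -sign(w).
    """
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]

w = [1.0 if i % 2 == 0 else -1.0 for i in range(100)]
b = 0.0
x = [0.51 if i % 2 == 0 else 0.49 for i in range(100)]  # mid-gray "image"

eps = 0.02  # each pixel changes by 2% of the full brightness range
x_adv = perturb(w, x, eps)

print(label(score(w, b, x)))      # original image
print(label(score(w, b, x_adv)))  # perturbed image
print(max(abs(a - c) for a, c in zip(x, x_adv)))  # largest per-pixel change
```

No single pixel moves by more than `eps`, but the small changes all push in the same direction and add up across 100 pixels, which is enough to flip the label.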

For now, we have no foolproof defense against adversarial examples in any medium, whether images, text or otherwise. But there is hope. For image recognition, researchers can purposely train a deep neural network on adversarial images so that it learns to handle them. Unfortunately, this approach, known as adversarial training, only defends well against the kinds of adversarial examples the model has seen. Plus, it lowers the accuracy of the model on non-adversarial images, and it’s computationally expensive. Recently, the fact that humans are so rarely duped by these same attacks has led some scientists to look for solutions inspired by our own biological vision.
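As a rough sketch of what adversarial training involves, the Python below trains a tiny logistic model on each example together with a worst-case perturbed copy of it. A logistic model stands in for a deep network, and the four data points, the perturbation size `eps` and the learning rate are all hypothetical. Each example is processed twice, which hints at where the extra computational cost comes from.

```python
import math
import random

def predict(w, b, x):
    """Probability of class 1 under a logistic model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Log-loss of the model on one labeled example."""
    p = predict(w, b, x)
    return -math.log(p) if y == 1 else -math.log(1.0 - p)

def worst_case(w, x, y, eps):
    """Shift each feature by eps in the direction that raises the loss."""
    direction = 1.0 if y == 0 else -1.0  # push the score toward the wrong class
    return [xi + direction * eps * ((wi > 0) - (wi < 0)) for wi, xi in zip(w, x)]

def train(data, eps, epochs=200, lr=0.1, seed=0):
    """Gradient descent on clean examples plus their adversarial twins.

    With eps=0 the twin equals the original, so this is ordinary training.
    """
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in range(len(data[0][0]))]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            for xs in (x, worst_case(w, x, y, eps)):
                err = predict(w, b, xs) - y  # gradient of log-loss w.r.t. the score
                w = [wi - lr * err * xi for wi, xi in zip(w, xs)]
                b -= lr * err
    return w, b

# Hypothetical two-feature data set: (features, label).
data = [([1.0, 1.0], 1), ([0.8, 1.2], 1), ([-1.0, -1.0], 0), ([-1.2, -0.8], 0)]

w, b = train(data, eps=0.3)
for x, y in data:
    x_adv = worst_case(w, x, y, 0.3)
    print(y, round(predict(w, b, x), 3), round(predict(w, b, x_adv), 3))
```

The perturbed copies are generated against the current model at every step. That is why the defense is tied to the particular kind of perturbation used during training.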

“Evolution has been optimizing many, many organisms for millions of years and has found some pretty interesting and creative solutions,” said Benjamin Evans, a computational neuroscientist at the University of Bristol. “It behooves us to take a peek at those solutions and see if we can reverse-engineer them.”

Focus on the Fovea

The most glaring difference between visual perception in humans and machines is that most humans take in the world through their eyes, and deep neural networks don’t. We see things most clearly in the middle of our visual field because of the location of the fovea, a tiny pit centered behind the pupil at the back of each eyeball. There, millions of photoreceptors that sense light are packed together more densely than anywhere else.

“We think we see everything around us, but that’s to a large extent an illusion,” said Tomaso Poggio, a computational neuroscientist at the Massachusetts Institute of Technology and director of the Center for Brains, Minds and Machines...

Read the full story on Quantamagazine.com using the link below.