The Center for Brains, Minds and Machines is well-represented at the thirty-fourth Conference on Neural Information Processing Systems (NeurIPS 2020).

IBM-MIT launch new AI challenge to push the limits of object recognition models

Below are listings of accepted papers, proceedings, and posters, along with accompanying coverage:

CBMM is proud to have hosted this year's Shared Visual Representations in Human & Machine Intelligence (SVRHM) workshop at NeurIPS 2020 on Dec. 12, 2020. Videos are now available.

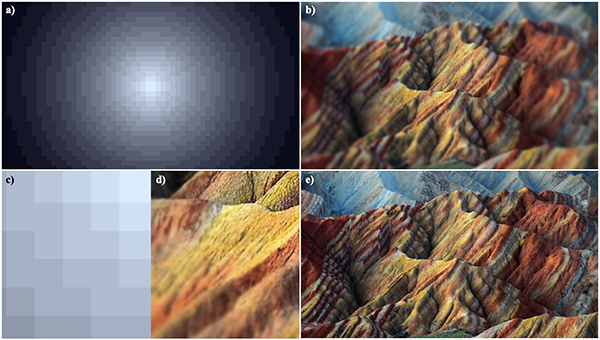

"Simulating a Primary Visual Cortex at the Front of CNNs Improves Robustness to Image Perturbations"

Dapello, J, Marques, T, Schrimpf, M, Geiger, F, Cox, D, DiCarlo, JJ, Advances in Neural Information Processing Systems 33 pre-proceedings (NeurIPS 2020)

- [article] "Neuroscientists find a way to make object-recognition models perform better", MIT News

- [video] Simulating a Primary Visual Cortex at the Front of CNNs Improves Robustness to Image Perturbations

- [code] GitHub: https://github.com/dicarlolab/vonenet

- [article] "Is neuroscience the key to protecting AI from adversarial attacks?", Tech Talks

- [poster and video] NeurIPS conference website

- [article] "IBM and MIT researchers find a new way to prevent deep learning hacks", IBM

“CUDA-Optimized real-time rendering of a Foveated Visual System”

E. Malkin, Deza, A., and Poggio, T. A., Shared Visual Representations in Human and Machine Intelligence (SVRHM) workshop at NeurIPS 2020.

- [code] https://github.com/ElianMalkin/foveate_blockwise

- [video] https://www.youtube.com/watch?v=Rr5oaiIsVbA

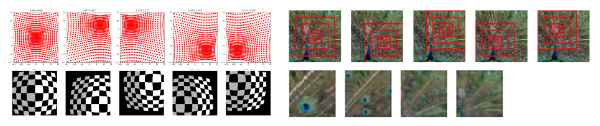

“Biologically Inspired Mechanisms for Adversarial Robustness”

M. Vuyyuru Reddy, Banburski, A., Pant, N., and Poggio, T., NeurIPS 2020 Poster Session 6, Dec. 10, 12:00-2:00 pm.

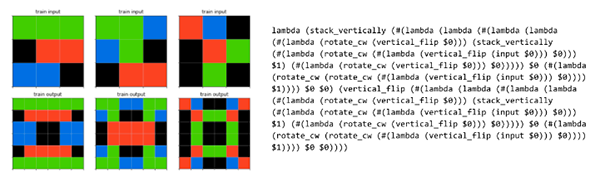

“Dreaming with ARC”

A. Banburski, Gandhi, A., Alford, S., Dandekar, S., Chin, P., and Poggio, T., Learning Meets Combinatorial Algorithms workshop at NeurIPS 2020.

![Illustration of our synthesis-based rule learner and comparison to previous work. A) Previous work [9]: Support examples are encoded into an external neural memory. A query output is predicted by conditioning on the query input sequence and interacting with the external memory via attention. B) Our model: Given a support set of input-output examples, our model produces a distribution over candidate grammars. We sample from this distribution, and symbolically check consistency of each sampled grammar against the support set until a grammar is found which satisfies the input-output examples in the support set. This approach allows much more effective search than selecting the maximum likelihood grammar from the network.](/sites/default/files/images/rulesynthesis.png)

"Learning Compositional Rules via Neural Program Synthesis"

Nye, M, Solar-Lezama, A, Tenenbaum, JB, Lake, BM, Advances in Neural Information Processing Systems 33 pre-proceedings (NeurIPS 2020)

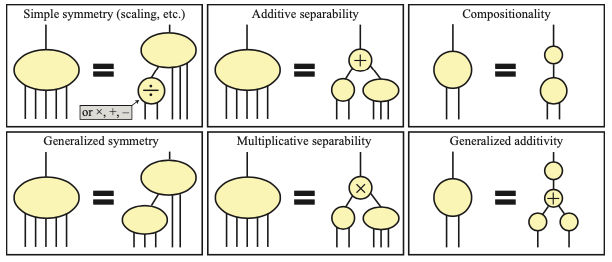

“AI Feynman 2.0: Pareto-optimal symbolic regression exploiting graph modularity”

S.-M. Udrescu, Tan, A., Feng, J., Neto, O., Wu, T., and Tegmark, M., in Advances in Neural Information Processing Systems 33 pre-proceedings (NeurIPS 2020).

- GitHub: https://github.com/SJ001/AI-Feynman

- Readthedocs: https://ai-feynman.readthedocs.io/en/latest/

- Database: https://space.mit.edu/home/tegmark/aifeynman.html

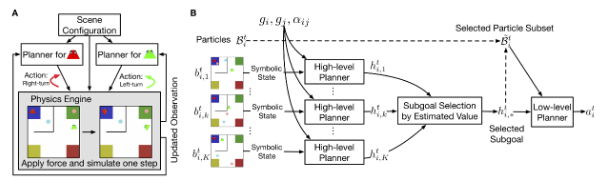

“PHASE: PHysically-grounded Abstract Social Events for Machine Social Perception”

In the Shared Visual Representations in Human and Machine Intelligence (SVRHM) workshop at NeurIPS 2020.

- [video] supplemental materials

“Learning abstract structure for drawing by efficient motor program induction”

In Advances in Neural Information Processing Systems 33 pre-proceedings (NeurIPS 2020).