In September 2014, Siemens Healthcare generously established the CBMM Siemens Graduate Fellowship. This fellowship provides support, for one academic year, to an MIT graduate student whose research bridges two of the main CBMM disciplines (computer science, cognitive science, and neuroscience) and contributes to CBMM's goals of furthering our understanding of human intelligence and engineering new methods based on that understanding. During the funded academic year, the CBMM Siemens Fellow will collaborate closely with Siemens Research, visiting the laboratory and completing a month-long summer internship at Siemens Research Labs in Princeton, NJ.

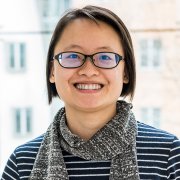

CBMM is happy to announce that Yen-Ling Kuo has been named the CBMM Siemens Graduate Fellow. Yen-Ling is a PhD student in the Computer Science and Artificial Intelligence Laboratory (CSAIL), working with CBMM researchers Boris Katz, Josh Tenenbaum, and Andrei Barbu. She received her Bachelor’s and Master’s degrees in Computer Science and Information Engineering from National Taiwan University in Taipei. Yen-Ling’s research interests lie at the intersection of Artificial Intelligence and Cognitive Science and are driven by a desire to understand how intelligence works and how it can be integrated into real-world applications. Specifically, she is interested in bridging robotic planning and human action understanding using probabilistic models and common sense reasoning. During this fellowship, she will focus on applying these methods to develop vision and language systems that include an understanding of the plans of the agents being discussed and observed. Yen-Ling was awarded the Greater China Computer Science Fellowship in 2016 as well as the Best Master Thesis Award by the Taiwanese Association of Artificial Intelligence in 2012.

Yen-Ling Kuo’s Research Summary:

Understanding what people are doing or trying to do comes naturally to humans, but we do not have a complete computational model for this ability even though it is critical to many AI applications. For example, knowing that a person is trying to pick up a very heavy box and failing to do so is what would allow a robot in the home to provide assistance. Today’s computer vision systems track the positions of the object and of the person in order to understand how they are moving, but they do not have access to this deeper level of understanding. To endow machines with this ability to see beneath the surface, and simultaneously to understand how humans have and use this ability, we are employing an efficient new approach to inverse planning. Roughly speaking, inverse planning is the idea that to understand what someone is doing, you have to put yourself in their shoes and imagine what you might be thinking if you were that person. This approach, while attractive and elegant, is often very computationally challenging; as a result, most of the research on this topic has been conducted in simulation, divorced from real-world observations. By adopting and extending efficient planners designed for controlling robots, we are developing approaches to scale inverse planning to larger domains, including plans that are described in natural language, with real-world observations coming from computer vision systems. This will lead to computer vision systems that are more capable and human-like, to more efficient robotic planners, and to a deeper understanding of how humans plan.
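
To make the idea of inverse planning concrete, here is a minimal toy sketch in Python. It is not the approach described in the summary; it uses a common textbook formulation in which the observer assumes the agent is approximately rational and applies Bayes' rule to infer the goal from observed actions. The grid world, the Manhattan-distance cost, the `beta` rationality parameter, and all function names are assumptions made up for this illustration.

```python
import math

# Hypothetical grid world: states are (x, y) cells, actions are unit moves.
ACTIONS = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}


def step(state, action):
    """Return the cell reached by taking `action` from `state`."""
    dx, dy = ACTIONS[action]
    return (state[0] + dx, state[1] + dy)


def cost_to_go(state, goal):
    """Manhattan distance: the optimal remaining cost on an obstacle-free grid."""
    return abs(state[0] - goal[0]) + abs(state[1] - goal[1])


def action_likelihood(state, action, goal, beta=2.0):
    """P(action | state, goal) for a noisily rational (softmax) agent.

    Actions that bring the agent closer to `goal` get higher probability;
    `beta` (an assumed parameter) controls how close to optimal the agent is.
    """
    scores = {a: -beta * cost_to_go(step(state, a), goal) for a in ACTIONS}
    top = max(scores.values())  # subtract the max for numerical stability
    norm = sum(math.exp(s - top) for s in scores.values())
    return math.exp(scores[action] - top) / norm


def infer_goal(start, actions, goals, beta=2.0):
    """Posterior over candidate goals given an observed action sequence.

    Starts from a uniform prior and multiplies in the likelihood of each
    observed action under each goal (accumulated in log space).
    """
    log_post = {g: -math.log(len(goals)) for g in goals}
    state = start
    for a in actions:
        for g in goals:
            log_post[g] += math.log(action_likelihood(state, a, g, beta))
        state = step(state, a)
    top = max(log_post.values())
    unnorm = {g: math.exp(lp - top) for g, lp in log_post.items()}
    total = sum(unnorm.values())
    return {g: p / total for g, p in unnorm.items()}


if __name__ == "__main__":
    candidate_goals = [(5, 0), (0, 5)]
    observed = ["right", "right", "right"]
    print(infer_goal((0, 0), observed, candidate_goals))
```

In this toy setting, an agent observed moving right three times from the origin is judged far more likely to be heading for the goal on the right than for the one above. The expensive part in realistic domains is the planning step hidden inside `cost_to_go`, which here is a closed-form distance; replacing it with a real planner is where the computational challenge mentioned above arises.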