Written by: Dan Gutfreund

Exactly one year ago, a team of researchers from the MIT-IBM Watson AI Lab and the Center for Brains, Minds, and Machines presented a new dataset, called ObjectNet, for testing object recognition models in images.

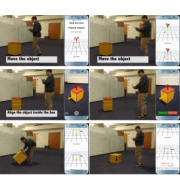

Unlike the standard approach to large-scale dataset construction in computer vision, which relies on scraping images off the internet and crowdsourcing the labeling process, ObjectNet was collected by crowdsourcing the creation of the images themselves. Specifically, the team designed an approach to collect data on crowdsourcing platforms such as Amazon Mechanical Turk in a way that controls for several visual aspects of the images: the object's pose, its location (or context), and the camera angle. This is important because in popular benchmark datasets such as ImageNet, objects tend to appear in typical poses (e.g. chairs stand on their legs rather than lying on their side) and expected contexts (e.g. forks appear in the kitchen or on dining tables but not in the bathroom sink). This inherently injects biases into such datasets, which ObjectNet removes by introducing the controls.

In a paper published at NeurIPS 2019, the team showed that object recognition models trained on ImageNet performed poorly on ObjectNet: they were significantly less accurate than humans, and less accurate than the same models on the ImageNet test set. This suggests that these models learn and depend on the biases described above and therefore cannot be trusted to generalize to real-world settings, for example in a perception module for a robot operating in a typical household.

Today, we are announcing a challenge for the computer vision community to develop robust object recognition models that make accurate predictions on ObjectNet images. The challenge will run as an open competition with a public leaderboard, and the winners will be announced in June during CVPR 2021.

ObjectNet: a dataset of real-world images created by researchers at IBM and MIT to test the limits of object recognition models.

The challenge is unique in two important respects that differentiate it from typical classification or prediction challenges in computer vision. First, we do not provide a training set. Second, the test set is completely hidden: not only the labels, but also the images themselves. The only information available to participants is the list of object classes that appear in the dataset. Together, these two constraints demand that successful models be highly robust and generalizable. To support the hidden data requirement, participants will be asked to upload their models packaged in a Docker container. Our team developed a platform that leverages EvalAI elements in the front end and implements a back end that runs in the IBM Cloud. Using this platform, models containerized in Docker images will run on the ObjectNet images; the system will compare the results to the ground truth labels, report accuracy metrics to the model developers, and update the leaderboard.
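The scoring step, in which the system compares a model's results to the ground truth labels, can be pictured with a minimal sketch. This is not the platform's actual code: the function name `top_k_accuracy`, the image identifiers, the dictionary layout, and the choice of top-k accuracy as the metric are all assumptions made for illustration.

```python
from typing import Dict, List


def top_k_accuracy(
    predictions: Dict[str, List[str]],
    ground_truth: Dict[str, str],
    k: int,
) -> float:
    """Fraction of images whose true label is among the first k predicted labels."""
    if not ground_truth:
        raise ValueError("ground_truth must not be empty")
    hits = 0
    for image_id, true_label in ground_truth.items():
        # An image the model did not score counts as a miss.
        ranked = predictions.get(image_id, [])
        if true_label in ranked[:k]:
            hits += 1
    return hits / len(ground_truth)


# Hypothetical hidden labels, visible only to the evaluation back end.
ground_truth = {
    "img_001": "chair",
    "img_002": "fork",
    "img_003": "mug",
    "img_004": "broom",
}

# Hypothetical model output: labels ranked from most to least likely.
predictions = {
    "img_001": ["chair", "stool", "bench"],
    "img_002": ["spoon", "fork", "knife"],
    "img_003": ["bowl", "vase", "cup"],
    # img_004 is missing on purpose to show how unscored images are handled.
}

top1 = top_k_accuracy(predictions, ground_truth, k=1)
top2 = top_k_accuracy(predictions, ground_truth, k=2)
print(f"top-1 accuracy: {top1:.2f}")
print(f"top-2 accuracy: {top2:.2f}")
```

In a model-to-data setup, only aggregate numbers like these would be returned to the participant, while the images and labels stay on the evaluation side.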

This model-to-data approach to running challenges is interesting in its own right and holds tremendous promise for the future, as it provides a means to run public challenges on private data that cannot be shared for business, legal, or personal privacy reasons. The ObjectNet challenge serves as a proof of concept for this approach, and our hope is to run many more challenges in the future, leveraging cutting-edge research to solve problems that could not otherwise be addressed because of data privacy, e.g. in the industrial, financial, and defense domains.

The ObjectNet challenge will be launched on December 14th, 2020. We encourage research teams from all over the world to participate.

See the full article on IBM's website using the link below.