Dec 2, 2015 - 4:30 pm

Venue: Harvard Northwest Building, Room 243

Address: 52 Oxford St., Cambridge, MA 02138

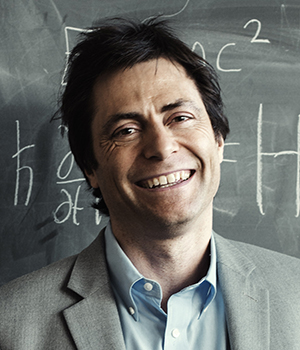

Speaker/s:

Shimon Ullman, Boris Katz

Thrust 3 Projects

Yevgeni Berzak - Human Language Learning

Abstract: Linguists and psychologists have long been studying cross-linguistic transfer, the influence of native language properties on linguistic performance in a foreign language. In this work we provide empirical evidence for this process in the form of a strong correlation between language similarities derived from structural features in English as Second Language (ESL) texts and equivalent similarities obtained from the typological features of the native languages. We leverage this finding to recover native language typological similarity structure directly from ESL text, and perform prediction of typological features in an unsupervised fashion with respect to the target languages. Our method achieves results that are highly competitive with those obtained using equivalent methods that rely on typological resources. Finally, we outline ongoing work that utilizes transfer phenomena for prediction of grammatical error distributions and syntactic parsing for learner language.

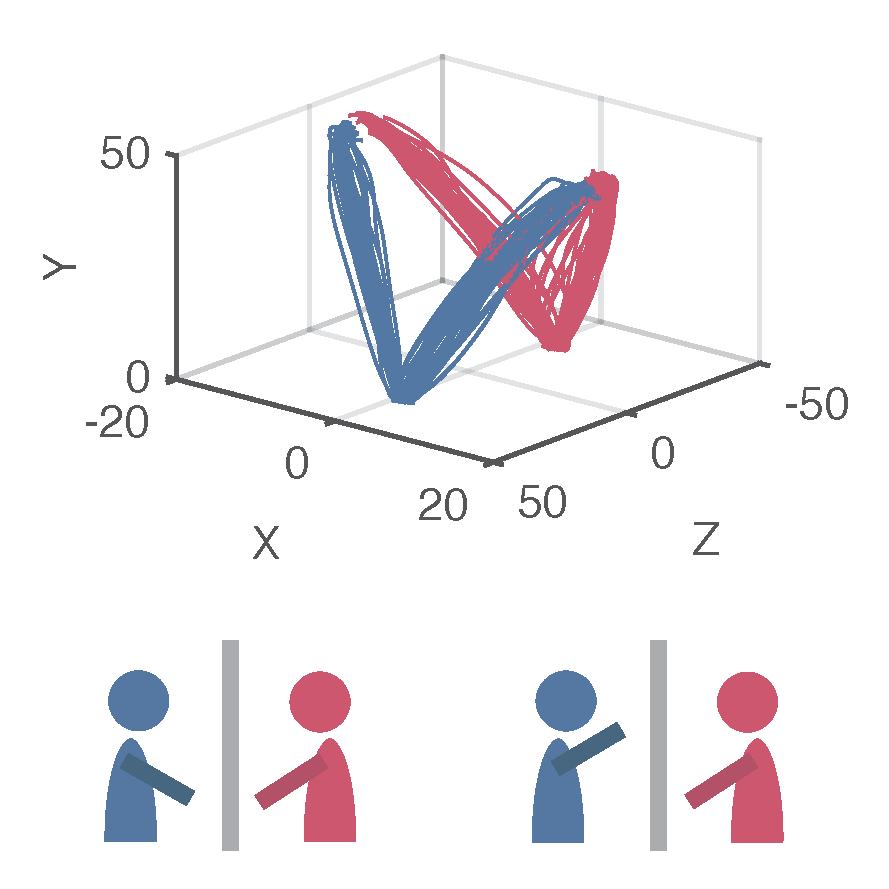

Andrei Barbu - Disambiguation and Grounded Question Answering

Abstract: Humans perform many language tasks that are grounded in perception: description, language acquisition, disambiguation, question answering, etc. These tasks share common underlying principles, and we show how a single mechanism can be repurposed to address many of them. In disambiguation, we show how an ambiguous sentence with multiple interpretations can be understood in the context of a video depicting one of those interpretations. In question answering, we show how a question can be answered given a video by repurposing a vision-language sentence generation mechanism. Our methods share data and do not need to be retrained for every novel task. We briefly discuss extensions to other vision-language tasks and to language-only tasks, such as translation, that can potentially be solved through perception.

Candace Ross - Predicting the Future

Abstract: We present a model which predicts the motion of objects in still images. This predictive ability is important to humans; our reflexes are relatively slow, and if we were limited to always responding to the information at hand we would be unable to navigate complex environments and avoid dangers. Our goal is to bring to bear an insight from cognitive science to vision: humans can reliably predict the motion of objects from still images. We demonstrate how a model can learn this ability in an unsupervised fashion by observing YouTube videos. Our model employs a deep neural network to predict dense optical flow given single frames. This approach is competitive with standard optical flow algorithms such as Lucas-Kanade, which require videos, not just images. This work provides a new foundation for many techniques such as learning to automatically segment objects, improved robotic navigation, extending video action recognition to images, and the development of a cognitively-motivated attention mechanism.

Presentation: CBMM Thrust 3 research projects