We take advantage of a rare opportunity to interrogate the neural signals underlying language processing in the human brain by invasively recording field potentials from the cortex of patients with epilepsy. These signals provide high spatial and temporal resolution and are therefore well suited to investigating language processing, a topic that is difficult to study in animal models. This project examines how cortical signals represent different aspects of language processing, including basic word properties (length, type, semantics), grammatical structures, and the extraction of meaning.

Vision and Language

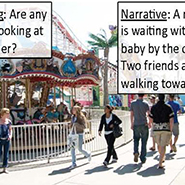

The goal of this research is to combine vision with aspects of language and social cognition to obtain complex knowledge about the environment. To obtain a full understanding of visual scenes, computational models should be able to extract from the scene any meaningful information that a human observer can extract about actions, agents, goals, scenes and object configurations, social interactions, and more. We refer to this as the ‘Turing test for vision,’ i.e., the ability of a machine to use vision to answer a large and flexible set of queries about objects and agents in an image in a human-like manner. Queries might be about objects, their parts, and spatial relations between objects, actions, goals, and interactions. Understanding queries and formulating answers requires interactions between vision and natural language. Interpreting goals and interactions requires connections between vision and social cognition. Answering queries also requires task-dependent processing, i.e., different visual processes to achieve different goals.
