Apr 2, 2019 - 4:00 pm

Venue:

MIT Building 46-3002 (Singleton Auditorium)

Address:

43 Vassar St, Cambridge, MA 02139

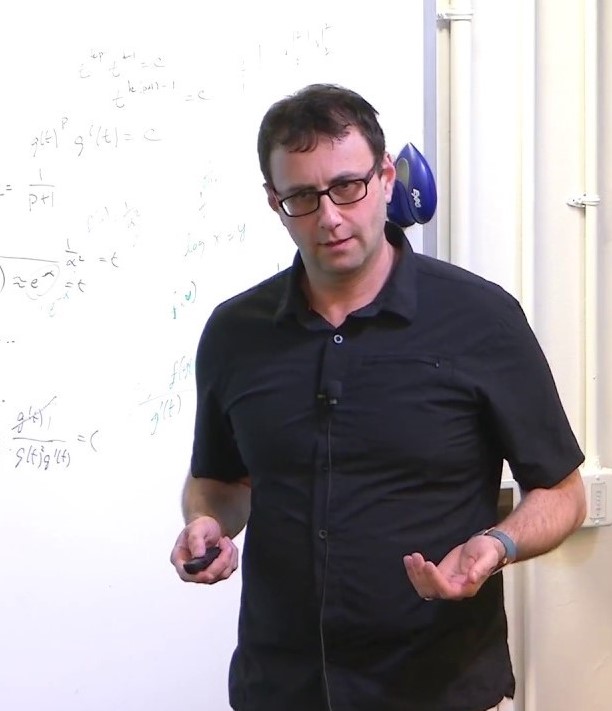

Speaker/s:

Dr. Jon Bloom, Broad Institute

Abstract: When trained to minimize reconstruction error, a linear autoencoder (LAE) learns the subspace spanned by the top principal directions but cannot learn the principal directions themselves. In this talk, I'll explain how this observation became the focus of a project on representation learning of neurons using single-cell RNA data. I'll then share how this focus led us to a satisfying conversation between numerical analysis, algebraic topology, random matrix theory, deep learning, and computational neuroscience. We'll see that an L2-regularized LAE learns the principal directions as the left singular vectors of the decoder, providing a simple and scalable PCA algorithm related to Oja's rule. We'll use the lens of Morse theory to smoothly parameterize all LAE critical manifolds and the gradient trajectories between them, and see how algebra and probability theory provide principled foundations for ensemble learning in deep networks, while suggesting new algorithms. Finally, we'll come full circle to neuroscience via the "weight transport problem" (Grossberg 1987), proving that L2-regularized LAEs are symmetric at all critical points. This theorem provides local learning rules by which maximizing information flow and minimizing energy expenditure give rise to less-biologically-implausible analogues of backpropagation, which we are excited to explore in vivo and in silico. Joint learning with Daniel Kunin, Aleksandrina Goeva, and Cotton Seed.
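As a rough numerical illustration of the abstract's claim that an L2-regularized LAE recovers the principal directions as the left singular vectors of the decoder, here is a minimal NumPy sketch. It is not taken from the project repository: the synthetic data, the names `W1`, `W2`, `lam`, and `lr`, and the choice of plain full-batch gradient descent are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (assumption): m features, n samples, well-separated variances.
m, n, k = 5, 500, 2
stds = np.array([3.0, 2.0, 1.0, 0.5, 0.25])
Q, _ = np.linalg.qr(rng.normal(size=(m, m)))        # random orthogonal basis
X = Q @ (stds[:, None] * rng.normal(size=(m, n)))
X -= X.mean(axis=1, keepdims=True)                  # center each feature
C = X @ X.T / n                                     # sample covariance (m x m)

lam = 0.5   # L2 penalty (hypothetical value, below the k-th eigenvalue of C)
lr = 0.01   # step size (hypothetical value)
W1 = 0.01 * rng.normal(size=(k, m))                 # encoder
W2 = 0.01 * rng.normal(size=(m, k))                 # decoder

# Minimize (1/n) * ||X - W2 W1 X||_F^2 + lam * (||W1||_F^2 + ||W2||_F^2).
for _ in range(5000):
    R = (np.eye(m) - W2 @ W1) @ C                   # residual map times covariance
    grad_W1 = -2 * W2.T @ R + 2 * lam * W1
    grad_W2 = -2 * R @ W1.T + 2 * lam * W2
    W1 -= lr * grad_W1
    W2 -= lr * grad_W2

# Left singular vectors of the decoder vs. top-k eigenvectors of C.
U_dec = np.linalg.svd(W2, full_matrices=False)[0]
evals, evecs = np.linalg.eigh(C)                    # eigenvalues in ascending order
U_pca = evecs[:, ::-1][:, :k]                       # top-k principal directions
alignment = np.abs(np.sum(U_dec * U_pca, axis=0))   # |cosine| per direction

print("alignment with principal directions:", alignment)
print("max |W1 - W2^T|:", np.max(np.abs(W1 - W2.T)))
```

In this sketch, alignment values near 1 would indicate that each left singular vector of the decoder matches a principal direction up to sign, and a small `max |W1 - W2^T|` would reflect the encoder-decoder symmetry at critical points mentioned in the abstract. Setting `lam = 0` should still recover the top-`k` subspace but, in general, not the individual directions.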

Project resources: https://github.com/danielkunin/Regularized-Linear-Autoencoders

Short Bio: Jon Bloom is an Institute Scientist at the Stanley Center for Psychiatric Research within the Broad Institute of MIT and Harvard. In 2015, he co-founded the Models, Inference, and Algorithms Initiative and a team (Hail) building distributed systems used throughout academia and industry to uncover the biology of disease. In his youth, Jon did useless math at Harvard and Columbia and learned useful math by rebuilding MIT’s Intro to Probability and Statistics as a Moore Instructor and NSF postdoc. These days, he is exuberantly surprised to find the useless math may be useful after all.

Organizer:

Hector Penagos

Organizer Email:

cbmm-contact@mit.edu