Home Page Spotlights

Study finds infants try harder after seeing adults struggle to achieve a goal. By Anne Trafton | MIT News Office

The 2017 nomination process is underway. The CBMM thrust leaders will nominate student candidates and a nominating committee will then select the 2017 Siemens Fellow. Read more about this wonderful collaboration between CBMM and Siemens Research.

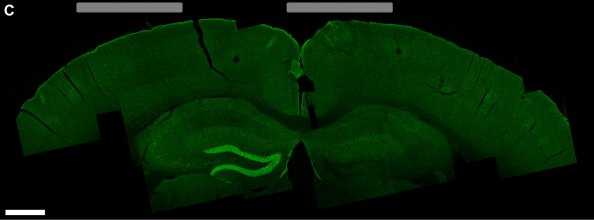

From the Livingstone lab, an important result on the requirements for the formation of face domains was just published in Nature Neuroscience.

Congratulations to Thomas O'Connell and Kasper Vinken on successfully completing this year's BMM Summer Course, and sincere thanks to Google X for enabling Thomas and Kasper's participation.

Congratulations to Heather Kosakowski on successfully completing this year's BMM Summer Course, and sincere thanks to the Hidary Foundation for enabling Heather's participation.

9.523/6.861 Science of Intelligence explores the problem of intelligence (its nature, how it is produced by the brain, and how it could be replicated in machines) with an approach that integrates computational modeling, neuroscience, and cognitive science.

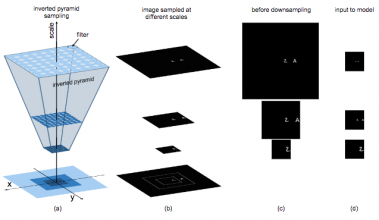

Crowding is a visual effect suffered by humans, in which an object that can be recognized in isolation can no longer be recognized when other objects, called flankers, are placed close to it. In this work, we study the effect of crowding in artificial...

In this talk, Kevin Murphy summarizes some recent work in his group which is related to visual scene understanding and "grounded" language understanding.

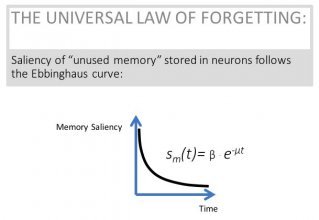

"How important are Undergraduate College Academics after graduation? How much do we actually remember after we leave the college classroom, and for how long?..."

Electrodes placed on the scalp could help patients with brain diseases. ... The new, noninvasive approach could make it easier to adapt deep brain stimulation to treat additional disorders, the researchers say.

Pulses of electricity delivered to the brain can help patients with Parkinson’s disease, depression, obsessive-compulsive disorder and possibly other conditions. But the available methods all have shortcomings: They either involve the risks of surgery, fr

Scientific American - Neuroscience | Bret Stetka | May 18, 2017

The properties of a representation, such as smoothness, adaptability, generality, equivariance/invariance, depend on restrictions imposed during learning. In this paper, we propose using data symmetries, in the sense of equivalences under transforma...

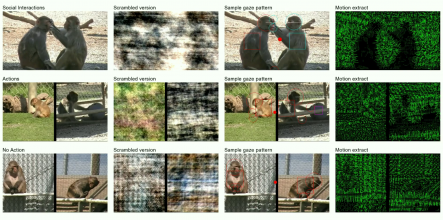

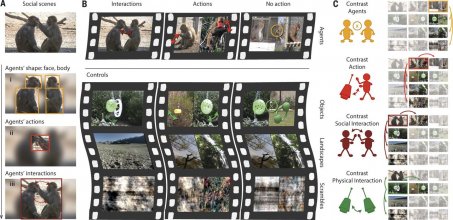

A dedicated network for social interaction processing in the primate brain, Science, May 19, 2017

Today: Kevin Murphy (Google Research) will discuss recent work related to visual scene understanding and "grounded" language understanding. Talk: 4pm, May 26th, MIT Singleton Auditorium (46-3002)

![Seeing faces is necessary for face-domain formation [Nature]](https://cbmm.mit.edu/sites/default/files/styles/all_home_page_spotlights_/public/home-feature/seeing-faces.jpg?itok=v0XOStkS)