May 19, 2020 - 2:30 pm

We’ve built machines that are capable of incredible feats, yet still they have nothing on a baby.

By Will Knight

Elizabeth Spelke, a cognitive psychologist at Harvard, has spent her career testing the world’s most sophisticated learning system—the mind of a baby.

Gurgling infants might seem like no match for artificial intelligence. They are terrible at labeling images, hopeless at mining text, and awful at videogames. Then again, babies can do...

May 19, 2020 - 2:00 pm

Noga Zaslavsky

Title: Efficient compression and linguistic meaning in humans and machines

Abstract: In this talk, I will argue that efficient compression may provide a fundamental principle underlying the human capacity to communicate and reason about meaning, and may help to inform machines with similar...

May 5, 2020 - 4:00 pm

Zoom

Max Tegmark, MIT

Title: AI for physics & physics for AI

Abstract: After briefly reviewing how machine learning is becoming ever-more widely used in physics, I explore how ideas and methods from physics can help improve machine learning, focusing on automated discovery of mathematical formulas from data. I...

April 28, 2020 - 2:00 pm

Zoom

Youssef Mroueh, MIT-IBM Watson AI lab

Title: Sobolev Independence Criterion: Non-Linear Feature Selection with False Discovery Control

Abstract: In this talk I will show how learning gradients helps us design new non-linear algorithms for feature selection and black box sampling, and also helps in understanding neural...

April 21, 2020 - 4:00 pm

Luca Carlone

Abstract:

Spatial perception has witnessed unprecedented progress in the last decade. Robots are now able to detect objects and create large-scale maps of an unknown environment, which are crucial capabilities for navigation and manipulation. Despite these advances, both researchers and...

April 21, 2020 - 12:15 pm

Survey from the Saxe Lab aims to measure the toll of social isolation during the Covid-19 pandemic.

Julie Pryor | McGovern Institute for Brain Research

After being forced to relocate from their MIT dorms during the Covid-19 crisis, two members of Professor Rebecca Saxe's lab at the McGovern Institute for Brain Research are now applying their psychology skills to study the impact of mandatory relocation and social isolation on mental health.

“When...

April 18, 2020 - 9:45 am

Are we really going to bet that we can go back to life as normal without proper coronavirus tracking in place?

By Thomas L. Friedman

“LIBERATE MINNESOTA!” “LIBERATE MICHIGAN!” “LIBERATE VIRGINIA.”

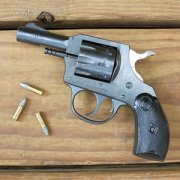

With these three short tweets last week, President Trump attempted to kick off the post-lockdown phase of America’s coronavirus crisis. It should be called: “American Russian roulette: The Covid-19 version.”

What Trump was saying with those tweets was...

April 16, 2020 - 11:00 am

Altruism toward strangers grows out of the emotional attachment between babies and their caregivers.

By Alison Gopnik

The last few weeks have seen extraordinary displays of altruism. Ordinary people have transformed their lives—partly to protect themselves and the people they love from the Covid-19 pandemic, but also to help other people they don’t even know. But where does altruism come from? How could evolution by natural selection produce...

April 14, 2020 - 2:00 pm

Zoom

Tomaso Poggio (CBMM), Mikhail Belkin (Ohio State University), Constantinos Daskalakis (CSAIL), Gil Strang (...

Abstract:

Developing theoretical foundations for learning is a key step towards understanding intelligence. Supervised learning is a paradigm in which natural or artificial networks learn a functional relationship from a set of n input-output training examples. A main challenge for the theory is...

April 14, 2020 - 10:00 am

MIT professors Sabine Iatridou, Jonathan Gruber, and Rebecca Saxe have been selected to pursue their work “under the freest possible conditions.”

Julie Pryor | McGovern Institute for Brain Research

MIT faculty members Sabine Iatridou, Jonathan Gruber, and Rebecca Saxe are among 175 scientists, artists, and scholars awarded 2020 fellowships from the John Simon Guggenheim Foundation. Appointed on the basis of prior achievement and exceptional...

April 7, 2020 - 2:00 pm

Zoom

Sam Gershman, Harvard/CBMM

Abstract: In this talk, I will present a theory of reinforcement learning that falls in between "model-based" and "model-free" approaches. The key idea is to represent a "predictive map" of the environment, which can then be used to efficiently compute values. I show how such a map explains many...

April 2, 2020 - 10:00 am

A study on isolation’s neural underpinnings implies many may feel literally “starved” for contact amid the COVID-19 pandemic

By Lydia Denworth

Loneliness hurts. It is psychologically distressing and so physically unhealthy that being lonely increases the likelihood of an earlier death by 26 percent. But the feeling may serve a purpose. Psychologists theorize it hurts so much because, like hunger and thirst, loneliness acts as a biological alarm...

March 31, 2020 - 1:00 pm

Zoom Webinar - Registration Required

Profs. Amnon Shashua and Shai Shalev-Shwartz, The Hebrew University of Jerusalem, Israel

Registration is required, please see details below.

Abstract: We present an analysis of a risk-based selective quarantine model where the population is divided into low- and high-risk groups. The high-risk group is quarantined until the low-risk group achieves herd immunity. We tackle the question...

March 16, 2020 - 4:00 pm

Singleton Auditorium

Lior Wolf, Tel Aviv University and Facebook AI Research.

Please note that this talk has been canceled.

We will reschedule his talk at the earliest convenience.

Abstract: Hypernetworks, also known as dynamic networks, are neural networks in which the weights of at least some of the layers vary dynamically based on the input. Such networks have...

March 10, 2020 - 4:00 pm

MIT 46-5165

There will be no meeting on Tues., March 10, 2020