July 18, 2019 - 4:00 pm

MIT 46-5165

Gemma Roig

Title:

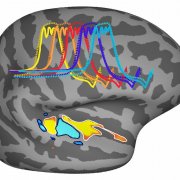

Task-specific Vision DNN Models and Their Relation for Explaining Different Areas of the Visual Cortex

Abstract:

Deep Neural Networks (DNNs) are state-of-the-art models for many vision tasks. We propose an approach to assess the relationship between visual tasks and their task-specific...

June 17, 2019 - 9:15 am

Learning to code involves recognizing how to structure a program, and how to fill in every last detail correctly. No wonder it can be so frustrating.

A new program-writing AI, SketchAdapt, offers a way out. Trained on tens of thousands of program examples, SketchAdapt learns how to compose short, high-level programs, while letting a second set of algorithms find the right sub-programs to fill in the details. Unlike similar approaches for...

June 11, 2019 - 4:00 pm

Katharina Dobs & Ratan Murty

Murty talk title: Does face selectivity arise without visual experience with faces in the human brain?

Dobs talk title: Testing functional segregation of face and object processing in deep convolutional neural networks

June 10, 2019 - 1:30 pm

Results of study involving primates suggest that speech and music may have shaped the human brain’s hearing circuits.

Monday, June 10, 2019

NIH | National Institutes of Health

In the eternal search for understanding what makes us human, scientists found that our brains are more sensitive to pitch, the harmonic sounds we hear when listening to music, than the brains of our evolutionary relative, the macaque monkey. The study, funded in part by the National...

May 22, 2019 - 12:30 pm

ARLINGTON, VA, UNITED STATES

Story by Warren Duffie Office of Naval Research

How can the neuroscience behind emotion lead to new concepts of artificial intelligence? How does the human brain make fast decisions in real-world scenarios—and what impact does this have on human-to-human and human-machine interactions?

These are just some of the questions being pondered by the 10 scientists and engineers recently announced as members of the 2019...

May 14, 2019 - 4:00 pm

Duncan Stothers, Will Xiao, Nimrod Shaham

Duncan Stothers:

Title: Turing's Child Machine: A Deep Learning Model of Neural Development

Abstract:

Turing recognized development’s connection to intelligence when he proposed engineering a ‘child machine’ that becomes intelligent through a developmental process, instead of top-down hand-...

May 2, 2019 - 2:45 pm

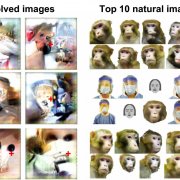

To find out which sights specific neurons in monkeys "like" best, researchers designed an algorithm, called XDREAM, that generated images that made neurons fire more than any natural images the researchers tested. As the images evolved, they started to look like distorted versions of real-world stimuli. The work appears May 2 in the journal Cell.

"When given this tool, cells began to increase their firing rate beyond levels we have seen before,...

May 2, 2019 - 1:30 pm

Study shows that artificial neural networks can be used to drive brain activity.

Anne Trafton | MIT News Office

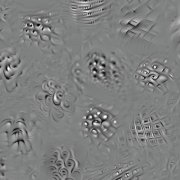

MIT neuroscientists have performed the most rigorous testing yet of computational models that mimic the brain’s visual cortex.

Using their current best model of the brain’s visual neural network, the researchers designed a new way to precisely control individual neurons and populations of neurons in the middle of that network. In an...

April 30, 2019 - 1:30 pm

Company announces $25 million, five-year collaboration.

MIT and Liberty Mutual Insurance today announced a $25 million, five-year collaboration to support artificial intelligence research in computer vision, computer language understanding, data privacy and security, and risk-aware decision making, among other topics.

The new collaboration launched today at a meeting between leadership from both institutions, including Liberty Mutual Chairman...

April 29, 2019 - 5:30 pm

by Sabbi Lall

Your ability to recognize objects is remarkable. If you see a cup under unusual lighting or from unexpected directions, there’s a good chance that your brain will still compute that it is a cup. Such precise object recognition is one holy grail for AI developers, such as those improving self-driving car navigation. While modeling primate object recognition in the visual cortex has revolutionized artificial visual recognition...

April 26, 2019 - 4:00 pm

Singleton Auditorium (46-3002)

Blake Richards, Assistant Professor, Associate Fellow of the Canadian Institute for Advanced Research (CIFAR)

Abstract:

Theoretical and empirical results in the neural networks literature demonstrate that effective learning at a real-world scale requires changes to synaptic weights that approximate the gradient of a global loss function. For neuroscientists, this means that the brain must have mechanisms...

April 23, 2019 - 12:00 pm

By Julio Cachila

Robots are very useful. They are used in industrial applications, manufacturing, and assembly. Now, a new system will teach them to do something more refined. Something like molding sticky rice into that edible little thing called sushi.

A group of MIT researchers, namely Yunzhu Li, Jiajun Wu, Russ Tedrake, Joshua B. Tenenbaum, and Antonio Torralba, have developed a system that improves a robot’s ability to mold certain...

April 16, 2019 - 4:00 pm

MIT 46-5165

Kohitij Kar

Title: Recurrent computations during visual object perception—investigating within and beyond the primate ventral stream

Abstract

Recurrent circuits are ubiquitous in the primate ventral stream, which supports core object recognition: primates’ ability to rapidly categorize objects. While...

April 16, 2019 - 12:30 pm

PhD student Sarah Schwettmann explains how the study of visual perception can translate students’ creativity across domains.

by Connie Blaszczyk | Center for Art, Science, and Technology

Computational neuroscientist Sarah Schwettmann is one of three instructors behind the cross-disciplinary course 9.S52/9.S916 (Vision in Art and Neuroscience), which introduces students to core concepts in visual perception through the lenses of art and...

April 9, 2019 - 4:30 pm

Kevin Smith