Language and Vision

Overview

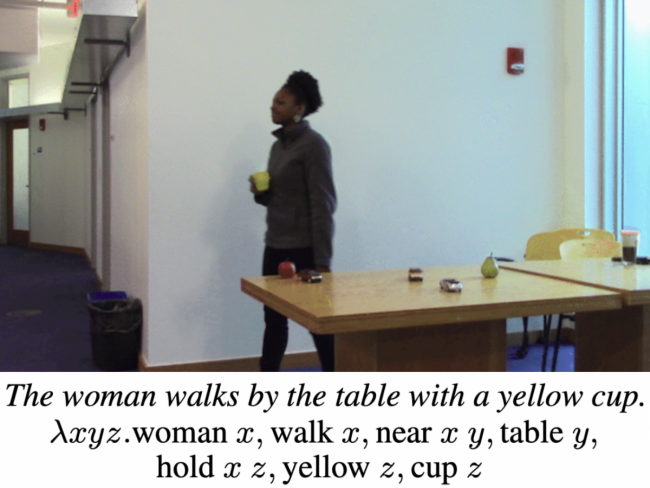

The ability to answer simple questions about the objects, agents, and actions portrayed in a visual scene, and the ability to use natural language commands to direct a robotic agent to navigate through a scene and manipulate objects, require the combination of visual and language understanding. This unit explores general frameworks for solving many tasks that rely on the integration of vision and language.

Videos

Further Study

Online Resources

Additional information about the speakers’ research and publications can be found at these websites:

- Boris Katz and Andrei Barbu: MIT InfoLab

- Andrei Barbu (MIT)

- Stefanie Tellex (Brown) and Humans to Robots Lab

- Richard Socher (You.com)

Readings

Berzak, Y., Huang, Y., Barbu, A., Korhonen, A., Katz, B. (2016) Anchoring and agreement in syntactic annotations, Proceedings of the 2016 Conference on Empirical Methods on Natural Language Processing, Austin, Texas

Kumar, A., Irsoy, O., Ondruska, P., Iyyer, M., Bradbury, J., Gulrajani, I., Zhong, V., Paulus, R., Socher, R. (2016) Ask me anything: Dynamic memory networks for natural language processing, 33rd International Conference on Machine Learning

Kuo, Y., Barbu, A., Katz, B. (2018) Deep sequential models for sampling-based planning, International Conference on Intelligent Robots and Systems, Madrid, Spain

Kuo, Y., Katz, B., Barbu, A. (2020) Deep compositional robotic planners that follow natural language commands, IEEE International Conference on Robotics and Automation, Paris, France

Patel, R., Pavlick, E., Tellex, S. (2020) Grounding language to non-markovian tasks with no supervision of task specifications, Proceedings of Robotics: Science and Systems

Ross, C., Barbu, A., Berzak, Y., Mayanganbayar, B., Katz, B (2018) Grounding language acquisition by training semantic parsers using captioned videos, Conference on Empirical Methods on Natural Language Processing, Brussels, Belgium

Tellex, S., Gopalan, N., Kress-Gazit, H., Matuszek, C. (2020) Robots that use language: A survey, Annual Review of Control, Robotics, and Autonomous Systems, 3, 1-35

Wang, C., Ross, C., Kuo, Y., Katz, B., Barbu, A. (2020) Learning a natural-language to LTL executable semantic parser for grounded robotics, 4th Conference on Robot Learning, Cambridge MA

Yu, H., Siddharth, N., Barbu, A., Siskind, J. M. (2015) A compositional framework for grounded language inference, generation, and acquisition in video, Journal of Artificial Intelligence Research, 52, 601-713

Xiong, C., Merity, S., Socher, R. (2016) Dynamic memory networks for visual and textual question answering, 33rd International Conference on Machine Learning